[Deep Learning] A Line-Following Car Developed with Deep Learning

After installing Docker, add the non-root user to the docker group. Use the following commands:

sudo groupadd docker

sudo gpasswd -a ${USER} docker

sudo systemctl restart docker # CentOS7/Ubuntu

# re-login

Model Training

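The model mentioned above can directly reuse the definition provided by PyTorch. Splitting the dataset and training the model are both wrapped in the code of the line_follower_model package.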
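The dataset file names that appear later in the calibration step (for example xy_008_160_31a8e30a-eca6-11ee-bb07-dfd665df7b81) suggest that each image's label, the annotated (x, y) point on the line, is encoded in its name. The sketch below assumes that naming scheme and a simple shuffled train/test split; `parse_label`, `split_dataset` and the 10% test fraction are hypothetical illustrations, not the line_follower_model package's actual code.

```python
import random
from pathlib import Path


def parse_label(filename):
    """Split 'xy_<x>_<y>_<uuid>.<ext>' into integer (x, y) coordinates (assumed naming scheme)."""
    stem = Path(filename).stem                # drop directory and extension
    prefix, x, y, _uuid = stem.split("_", 3)  # the uuid uses '-', so 3 splits are enough
    if prefix != "xy":
        raise ValueError(f"unexpected file name: {filename}")
    return int(x), int(y)                     # '008' -> 8


def split_dataset(filenames, test_fraction=0.1, seed=0):
    """Deterministically shuffle the file list and split it into (train, test)."""
    files = sorted(filenames)                 # sort first so the result does not depend on listing order
    random.Random(seed).shuffle(files)
    n_test = max(1, int(len(files) * test_fraction))
    return files[n_test:], files[:n_test]
```

Sorting before shuffling with a fixed seed keeps the split identical across runs, so the test loss printed during training is always measured on the same images.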

Next, run the following commands to start training:

cd ~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model

ros2 run line_follower_model training

This fails with the error: ./best_line_follower_model_xy.pth cannot be opened

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model$ ros2 run line_follower_model training

/home/thomas/.local/lib/python3.10/site-packages/torchvision/models/_utils.py:208: UserWarning: The parameter 'pretrained' is deprecated since 0.13 and may be removed in the future, please use 'weights' instead.

warnings.warn(

/home/thomas/.local/lib/python3.10/site-packages/torchvision/models/_utils.py:223: UserWarning: Arguments other than a weight enum or `None` for 'weights' are deprecated since 0.13 and may be removed in the future. The current behavior is equivalent to passing `weights=ResNet18_Weights.IMAGENET1K_V1`. You can also use `weights=ResNet18_Weights.DEFAULT` to get the most up-to-date weights.

warnings.warn(msg)

Downloading: "https://download.pytorch.org/models/resnet18-f37072fd.pth" to /home/thomas/.cache/torch/hub/checkpoints/resnet18-f37072fd.pth

100.0%

0.672721, 30.660010

save

Traceback (most recent call last):

File "/home/thomas/dev_ws/install/line_follower_model/lib/line_follower_model/training", line 33, in <module>

sys.exit(load_entry_point('line-follower-model==0.0.0', 'console_scripts', 'training')())

File "/home/thomas/dev_ws/install/line_follower_model/lib/python3.10/site-packages/line_follower_model/training_member_function.py", line 131, in main

torch.save(model.state_dict(), BEST_MODEL_PATH)

File "/home/thomas/.local/lib/python3.10/site-packages/torch/serialization.py", line 628, in save

with _open_zipfile_writer(f) as opened_zipfile:

File "/home/thomas/.local/lib/python3.10/site-packages/torch/serialization.py", line 502, in _open_zipfile_writer

return container(name_or_buffer)

File "/home/thomas/.local/lib/python3.10/site-packages/torch/serialization.py", line 473, in __init__

super().__init__(torch._C.PyTorchFileWriter(self.name))

RuntimeError: File ./best_line_follower_model_xy.pth cannot be opened.

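This happens because the user has no write permission on the directory: as the `ls -l` output below shows, the package directories are owned by root and are not writable by other users. (The torchvision UserWarnings about `pretrained` are only deprecation notices and are unrelated to the failure.) Here the permissions were opened up with chmod: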

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ ls -l

total 8

drwxr-xr-x 3 root root 4096 Mar 27 11:03 10_model_convert

drwxr-xr-x 7 root root 4096 Mar 27 14:29 line_follower_model

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ sudo chmod 777 *

[sudo] password for thomas:

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ ls

10_model_convert line_follower_model

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ ls -l

total 8

drwxrwxrwx 3 root root 4096 Mar 27 11:03 10_model_convert

drwxrwxrwx 7 root root 4096 Mar 27 14:29 line_follower_model
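`chmod 777` works, but it makes the directories writable by every user. Since they are owned by root (probably because the repository was cloned or built with sudo), handing them back to your own user, e.g. `sudo chown -R $USER:$USER ~/dev_ws/src/originbot_desktop`, is a narrower fix. Note also that the first run crashed only after a full epoch. A check like the one below could run before training to fail fast; the helper names are hypothetical, and only `BEST_MODEL_PATH` comes from the traceback above.

```python
import os


def is_writable(directory):
    """True if the current user can create files in `directory` (False if it does not exist)."""
    # Creating a file needs both write and search (execute) permission on the directory.
    return os.path.isdir(directory) and os.access(directory, os.W_OK | os.X_OK)


def ensure_parent_writable(path):
    """Raise PermissionError up front if `path` could not be saved later."""
    directory = os.path.dirname(os.path.abspath(path))
    if not is_writable(directory):
        raise PermissionError(
            f"cannot write {path}: {directory} is not writable by uid {os.getuid()}"
        )
    return directory
```

For example, calling `ensure_parent_writable(BEST_MODEL_PATH)` at the top of the training script's `main()` would raise immediately instead of after the first epoch.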

Run it again:

ros2 run line_follower_model training

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model$ ros2 run line_follower_model training

/home/thomas/.local/lib/python3.10/site-packages/torchvision/models/_utils.py:208: UserWarning: The parameter 'pretrained' is deprecated since 0.13 and may be removed in the future, please use 'weights' instead.

warnings.warn(

/home/thomas/.local/lib/python3.10/site-packages/torchvision/models/_utils.py:223: UserWarning: Arguments other than a weight enum or `None` for 'weights' are deprecated since 0.13 and may be removed in the future. The current behavior is equivalent to passing `weights=ResNet18_Weights.IMAGENET1K_V1`. You can also use `weights=ResNet18_Weights.DEFAULT` to get the most up-to-date weights.

warnings.warn(msg)

0.722548, 6.242182

save

0.087550, 5.827808

save

0.045032, 0.380008

save

0.032235, 0.111976

save

0.027896, 0.039962

save

0.030725, 0.204738

0.025075, 0.036258

save

0.028099, 0.040965

0.016858, 0.032197

save

0.019491, 0.036230

0.018325, 0.043560

0.019858, 0.322563

0.015115, 0.070269

0.014820, 0.030373
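Each line of the log appears to be `train_loss, test_loss` for one epoch, and `save` is printed only when the test loss reaches a new minimum, at which point the script calls `torch.save(model.state_dict(), BEST_MODEL_PATH)` (the call visible in the earlier traceback). The pure-Python sketch below reproduces that rule on the logged numbers; the column interpretation is our reading of the output, not something the package documents.

```python
def best_checkpoints(losses):
    """Return the epoch indices at which test loss hits a new minimum (where 'save' is printed)."""
    best = float("inf")
    saved = []
    for epoch, (_train_loss, test_loss) in enumerate(losses):
        if test_loss < best:
            best = test_loss
            saved.append(epoch)  # the real script would torch.save(...) here
    return saved


# (train_loss, test_loss) pairs copied from the log, up to the last line followed by its own output.
LOG = [
    (0.722548, 6.242182), (0.087550, 5.827808), (0.045032, 0.380008),
    (0.032235, 0.111976), (0.027896, 0.039962), (0.030725, 0.204738),
    (0.025075, 0.036258), (0.028099, 0.040965), (0.016858, 0.032197),
    (0.019491, 0.036230), (0.018325, 0.043560), (0.019858, 0.322563),
    (0.015115, 0.070269),
]

print(best_checkpoints(LOG))  # [0, 1, 2, 3, 4, 6, 8]
```

These indices match the lines followed by `save` in the log. By the same rule, the last line shown (0.014820, 0.030373) would also trigger a save, presumably printed just after the excerpt ends.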

Training takes a while, from tens of minutes to about an hour, so be patient. When it finishes, the file best_line_follower_model_xy.pth is generated:

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model$ ls -l

total 54892

-rw-rw-r-- 1 thomas thomas 44789846 Mar 28 13:28 best_line_follower_model_xy.pth
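The file size is roughly what a ResNet18 with a 2-output head should take up. The sketch below counts parameters layer by layer, assuming the torchvision ResNet18 layout with its final fc layer replaced by a 512-to-2 linear layer (consistent with the resnet18 weights download and the `[1, 2]` output the converter reports later). The helper names are illustrative.

```python
def conv(cin, cout, k):
    return cin * cout * k * k  # ResNet convolutions have no bias


def bn(c):
    return 2 * c  # learnable weight + bias; running stats are buffers, not parameters


def basic_block(cin, cout, downsample):
    n = conv(cin, cout, 3) + bn(cout) + conv(cout, cout, 3) + bn(cout)
    if downsample:  # 1x1 conv + BN on the shortcut when the channel count changes
        n += conv(cin, cout, 1) + bn(cout)
    return n


def resnet18_params(num_outputs):
    n = conv(3, 64, 7) + bn(64)  # stem
    cin = 64
    for cout in (64, 128, 256, 512):  # layer1..layer4, two BasicBlocks each
        n += basic_block(cin, cout, downsample=(cin != cout))
        n += basic_block(cout, cout, downsample=False)
        cin = cout
    return n + 512 * num_outputs + num_outputs  # fc weight + bias


print(resnet18_params(1000))    # 11689512 (stock ImageNet head)
print(resnet18_params(2))       # 11177538
print(resnet18_params(2) * 4)   # 44710152 bytes as float32
```

That is 44,710,152 bytes of float32 weights; the remaining ~80 KB of the 44,789,846-byte file is roughly BatchNorm running statistics (about 38 KB) plus serialization overhead.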

Model Conversion

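The floating-point model trained with PyTorch would run inefficiently if executed directly on the RDK X3. To improve efficiency and make use of the BPU's 5 TOPS of compute, the floating-point model must be converted into a fixed-point (quantized) model.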

Generating the ONNX Model

Next, run generate_onnx to convert the trained model into an ONNX model:

ros2 run line_follower_model generate_onnx

After it runs, the model best_line_follower_model_xy.onnx is generated in the current directory:

thomas@J-35:~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model$ ls -l

total 98556

-rw-rw-r-- 1 thomas thomas 44700647 Apr 2 21:02 best_line_follower_model_xy.onnx

-rw-rw-r-- 1 thomas thomas 44789846 Apr 2 19:37 best_line_follower_model_xy.pth

Starting the AI Toolchain Docker Container

Extract the previously downloaded AI toolchain Docker image and the OE package. The OE package has the following directory structure:

.

├── bsp

│ └── X3J3-Img-PL2.2-V1.1.0-20220324.tgz

├── ddk

│ ├── package

│ ├── samples

│ └── tools

├── doc

│ ├── cn

│ ├── ddk_doc

│ └── en

├── release_note-CN.txt

├── release_note-EN.txt

├── run_docker.sh

└── tools

├── 0A_CP210x_USB2UART_Driver.zip

├── 0A_PL2302-USB-to-Serial-Comm-Port.zip

├── 0A_PL2303-M_LogoDriver_Setup_v202_20200527.zip

├── 0B_hbupdate_burn_secure-key1.zip

├── 0B_hbupdate_linux_cli_v1.1.tgz

├── 0B_hbupdate_linux_gui_v1.1.tgz

├── 0B_hbupdate_mac_v1.0.5.app.tar.gz

└── 0B_hbupdate_win64_v1.1.zip

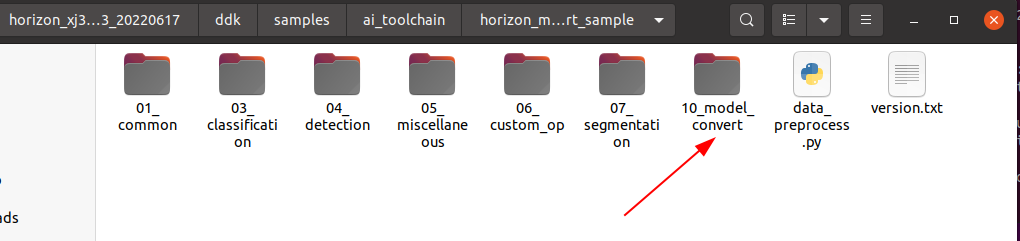

Copy the 10_model_convert package from the originbot_desktop code repository into the ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/ directory of the OE development package.

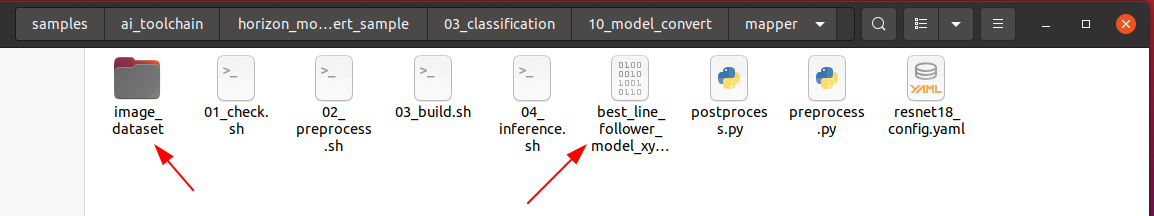

Next, copy the annotated dataset folder image_dataset from the line_follower_model package, together with the generated best_line_follower_model_xy.onnx model, into the ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/ directory mentioned above. Keep only about 100 images in image_dataset for calibration.
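One way to keep about 100 images is to move the full dataset aside and copy a random sample back under the name image_dataset, which is the `--src_dir` that 02_preprocess.sh reads. The sketch assumes the images are .jpg/.jpeg/.png files; the helper names and the image_dataset_full folder name are hypothetical.

```python
import random
import shutil
from pathlib import Path

IMAGE_EXTS = (".jpg", ".jpeg", ".png")  # assumed image formats


def choose_calibration_files(names, count=100, seed=0):
    """Pick up to `count` image names at random; sorting first makes the choice reproducible."""
    images = sorted(n for n in names if Path(n).suffix.lower() in IMAGE_EXTS)
    return random.Random(seed).sample(images, min(count, len(images)))


def copy_calibration_set(src_dir, dst_dir, count=100, seed=0):
    """Copy the chosen images from src_dir into dst_dir, creating dst_dir if needed."""
    src, dst = Path(src_dir), Path(dst_dir)
    names = (p.name for p in src.iterdir() if p.is_file())
    chosen = choose_calibration_files(names, count, seed)
    dst.mkdir(parents=True, exist_ok=True)
    for name in chosen:
        shutil.copy2(src / name, dst / name)
    return chosen
```

Usage, after `mv image_dataset image_dataset_full` inside the mapper directory: `copy_calibration_set("image_dataset_full", "image_dataset")`. A random sample is less likely than the first 100 files to over-represent one stretch of track.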

Then return to the root directory of the OE package and load the AI toolchain Docker image:

cd /home/thomas/Me/deeplearning/horizon_xj3_open_explorer_v2.3.3_20220727/

sh run_docker.sh /data/

Generating Calibration Data

Inside the launched Docker container, run the following:

cd ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper

sh 02_preprocess.sh

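The command output is as follows. The `[: unexpected operator` messages most likely appear because run_docker.sh uses bash-style test syntax while being run with `sh` (dash on Ubuntu); here they did not prevent the container from starting, and running `bash run_docker.sh /data/` should avoid them.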

thomas@J-35:~/Me/deeplearning/horizon_xj3_open_explorer_v2.3.3_20220727$ sudo sh run_docker.sh /data/

[sudo] password for thomas:

run_docker.sh: 14: [: unexpected operator

run_docker.sh: 23: [: openexplorer/ai_toolchain_centos_7_xj3: unexpected operator

docker version is v2.3.3

dataset path is /data

open_explorer folder path is /home/thomas/Me/deeplearning/horizon_xj3_open_explorer_v2.3.3_20220727

[root@1e1a1a7e24f4 open_explorer]# cd ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper

[root@1e1a1a7e24f4 mapper]# sh 02_preprocess.sh

cd $(dirname $0) || exit

python3 ../../../data_preprocess.py \

--src_dir ./image_dataset \

--dst_dir ./calibration_data_bgr_f32 \

--pic_ext .rgb \

--read_mode opencv

Warning please note that the data type is now determined by the name of the folder suffix

Warning if you need to set it explicitly, please configure the value of saved_data_type in the preprocess shell script

regular preprocess

write:./calibration_data_bgr_f32/xy_008_160_31a8e30a-eca6-11ee-bb07-dfd665df7b81.rgb

write:./calibration_data_bgr_f32/xy_009_160_39c18c40-eca6-11ee-bb07-dfd665df7b81.rgb

write:./calibration_data_bgr_f32/xy_028_092_3327df66-ec9b-11ee-bb07-dfd665df7b81.rgb

Compiling the Fixed-Point Model

Next, run the following commands to generate the fixed-point model file, which will later be deployed on the robot:

cd ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper

sh 03_build.sh

The command output is as follows:

[root@1e1a1a7e24f4 mapper]# sh 03_build.sh

2024-04-02 21:46:50,078 INFO Start hb_mapper....

2024-04-02 21:46:50,079 INFO log will be stored in /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/hb_mapper_makertbin.log

2024-04-02 21:46:50,079 INFO hbdk version 3.37.2

2024-04-02 21:46:50,080 INFO horizon_nn version 0.14.0

2024-04-02 21:46:50,080 INFO hb_mapper version 1.9.9

2024-04-02 21:46:50,081 INFO Start Model Convert....

2024-04-02 21:46:50,100 INFO Using abs path /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/best_line_follower_model_xy.onnx

2024-04-02 21:46:50,102 INFO validating model_parameters...

2024-04-02 21:46:50,231 WARNING User input 'log_level' deleted,Please do not use this parameter again

2024-04-02 21:46:50,231 INFO Using abs path /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/model_output

2024-04-02 21:46:50,232 INFO validating model_parameters finished

2024-04-02 21:46:50,232 INFO validating input_parameters...

2024-04-02 21:46:50,232 INFO input num is set to 1 according to input_names

2024-04-02 21:46:50,233 INFO model name missing, using model name from model file: ['input']

2024-04-02 21:46:50,233 INFO model input shape missing, using shape from model file: [[1, 3, 224, 224]]

2024-04-02 21:46:50,233 INFO validating input_parameters finished

2024-04-02 21:46:50,233 INFO validating calibration_parameters...

2024-04-02 21:46:50,233 INFO Using abs path /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/calibration_data_bgr_f32

2024-04-02 21:46:50,234 INFO validating calibration_parameters finished

2024-04-02 21:46:50,234 INFO validating custom_op...

2024-04-02 21:46:50,234 INFO custom_op does not exist, skipped

2024-04-02 21:46:50,234 INFO validating custom_op finished

2024-04-02 21:46:50,234 INFO validating compiler_parameters...

2024-04-02 21:46:50,235 INFO validating compiler_parameters finished

2024-04-02 21:46:50,239 WARNING Please note that the calibration file data type is set to float32, determined by the name of the calibration dir name suffix

2024-04-02 21:46:50,239 WARNING if you need to set it explicitly, please configure the value of cal_data_type in the calibration_parameters group in yaml

2024-04-02 21:46:50,240 INFO *******************************************

2024-04-02 21:46:50,240 INFO First calibration picture name: xy_008_160_31a8e30a-eca6-11ee-bb07-dfd665df7b81.rgb

2024-04-02 21:46:50,240 INFO First calibration picture md5:

83281dbdee2db08577524faa7f892adf /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/calibration_data_bgr_f32/xy_008_160_31a8e30a-eca6-11ee-bb07-dfd665df7b81.rgb

2024-04-02 21:46:50,265 INFO *******************************************

2024-04-02 21:46:51,682 INFO [Tue Apr 2 21:46:51 2024] Start to Horizon NN Model Convert.

2024-04-02 21:46:51,683 INFO Parsing the input parameter:{'input': {'input_shape': [1, 3, 224, 224], 'expected_input_type': 'YUV444_128', 'original_input_type': 'RGB', 'original_input_layout': 'NCHW', 'means': array([123.675, 116.28 , 103.53 ], dtype=float32), 'scales': array([0.0171248, 0.017507 , 0.0174292], dtype=float32)}}

2024-04-02 21:46:51,684 INFO Parsing the calibration parameter

2024-04-02 21:46:51,684 INFO Parsing the hbdk parameter:{'hbdk_pass_through_params': '--fast --O3', 'input-source': {'input': 'pyramid', '_default_value': 'ddr'}}

2024-04-02 21:46:51,685 INFO HorizonNN version: 0.14.0

2024-04-02 21:46:51,685 INFO HBDK version: 3.37.2

2024-04-02 21:46:51,685 INFO [Tue Apr 2 21:46:51 2024] Start to parse the onnx model.

2024-04-02 21:46:51,770 INFO Input ONNX model infomation:

ONNX IR version: 6

Opset version: 11

Producer: pytorch2.2.2

Domain: none

Input name: input, [1, 3, 224, 224]

Output name: output, [1, 2]

2024-04-02 21:46:52,323 INFO [Tue Apr 2 21:46:52 2024] End to parse the onnx model.

2024-04-02 21:46:52,324 INFO Model input names: ['input']

2024-04-02 21:46:52,324 INFO Create a preprocessing operator for input_name input with means=[123.675 116.28 103.53 ], std=[58.39484253 57.12000948 57.37498298], original_input_layout=NCHW, color convert from 'RGB' to 'YUV_BT601_FULL_RANGE'.

2024-04-02 21:46:52,750 INFO Saving the original float model: resnet18_224x224_nv12_original_float_model.onnx.

2024-04-02 21:46:52,751 INFO [Tue Apr 2 21:46:52 2024] Start to optimize the model.

2024-04-02 21:46:53,782 INFO [Tue Apr 2 21:46:53 2024] End to optimize the model.

2024-04-02 21:46:53,953 INFO Saving the optimized model: resnet18_224x224_nv12_optimized_float_model.onnx.

2024-04-02 21:46:53,953 INFO [Tue Apr 2 21:46:53 2024] Start to calibrate the model.

2024-04-02 21:46:53,954 INFO There are 100 samples in the calibration data set.

2024-04-02 21:46:54,458 INFO Run calibration model with kl method.

2024-04-02 21:47:06,290 INFO [Tue Apr 2 21:47:06 2024] End to calibrate the model.

2024-04-02 21:47:06,291 INFO [Tue Apr 2 21:47:06 2024] Start to quantize the model.

2024-04-02 21:47:09,926 INFO input input is from pyramid. Its layout is set to NHWC

2024-04-02 21:47:10,502 INFO [Tue Apr 2 21:47:10 2024] End to quantize the model.

2024-04-02 21:47:11,101 INFO Saving the quantized model: resnet18_224x224_nv12_quantized_model.onnx.

2024-04-02 21:47:14,165 INFO [Tue Apr 2 21:47:14 2024] Start to compile the model with march bernoulli2.

2024-04-02 21:47:15,502 INFO Compile submodel: main_graph_subgraph_0

2024-04-02 21:47:16,985 INFO hbdk-cc parameters:['--fast', '--O3', '--input-layout', 'NHWC', '--output-layout', 'NHWC', '--input-source', 'pyramid']

2024-04-02 21:47:17,276 INFO INFO: "-j" or "--jobs" is not specified, launch 2 threads for optimization

2024-04-02 21:47:17,277 WARNING missing stride for pyramid input[0], use its aligned width by default.

[==================================================] 100%

2024-04-02 21:47:25,296 INFO consumed time 8.06245

2024-04-02 21:47:25,555 INFO FPS=121.27, latency = 8246.2 us (see main_graph_subgraph_0.html)

2024-04-02 21:47:25,895 INFO [Tue Apr 2 21:47:25 2024] End to compile the model with march bernoulli2.

2024-04-02 21:47:25,896 INFO The converted model node information:

========================================================================================================================================

Node ON Subgraph Type Cosine Similarity Threshold

----------------------------------------------------------------------------------------------------------------------------------------

HZ_PREPROCESS_FOR_input BPU id(0) HzSQuantizedPreprocess 0.999952 127.000000

/conv1/Conv BPU id(0) HzSQuantizedConv 0.999723 3.186383

/maxpool/MaxPool BPU id(0) HzQuantizedMaxPool 0.999790 3.562476

/layer1/layer1.0/conv1/Conv BPU id(0) HzSQuantizedConv 0.999393 3.562476

/layer1/layer1.0/conv2/Conv BPU id(0) HzSQuantizedConv 0.999360 2.320694

/layer1/layer1.1/conv1/Conv BPU id(0) HzSQuantizedConv 0.997865 5.567303

/layer1/layer1.1/conv2/Conv BPU id(0) HzSQuantizedConv 0.998228 2.442273

/layer2/layer2.0/conv1/Conv BPU id(0) HzSQuantizedConv 0.995588 6.622376

/layer2/layer2.0/conv2/Conv BPU id(0) HzSQuantizedConv 0.996943 3.076967

/layer2/layer2.0/downsample/downsample.0/Conv BPU id(0) HzSQuantizedConv 0.997177 6.622376

/layer2/layer2.1/conv1/Conv BPU id(0) HzSQuantizedConv 0.996080 3.934074

/layer2/layer2.1/conv2/Conv BPU id(0) HzSQuantizedConv 0.997443 3.025215

/layer3/layer3.0/conv1/Conv BPU id(0) HzSQuantizedConv 0.998448 4.853349

/layer3/layer3.0/conv2/Conv BPU id(0) HzSQuantizedConv 0.998819 2.553357

/layer3/layer3.0/downsample/downsample.0/Conv BPU id(0) HzSQuantizedConv 0.998717 4.853349

/layer3/layer3.1/conv1/Conv BPU id(0) HzSQuantizedConv 0.998631 3.161120

/layer3/layer3.1/conv2/Conv BPU id(0) HzSQuantizedConv 0.998802 2.501193

/layer4/layer4.0/conv1/Conv BPU id(0) HzSQuantizedConv 0.999474 5.645166

/layer4/layer4.0/conv2/Conv BPU id(0) HzSQuantizedConv 0.999709 2.401657

/layer4/layer4.0/downsample/downsample.0/Conv BPU id(0) HzSQuantizedConv 0.999250 5.645166

/layer4/layer4.1/conv1/Conv BPU id(0) HzSQuantizedConv 0.999808 5.394126

/layer4/layer4.1/conv2/Conv BPU id(0) HzSQuantizedConv 0.999865 3.072157

/avgpool/GlobalAveragePool BPU id(0) HzSQuantizedConv 0.999965 17.365398

/fc/Gemm BPU id(0) HzSQuantizedConv 0.999967 2.144315

/fc/Gemm_NHWC2NCHW_LayoutConvert_Output0_reshape CPU -- Reshape

2024-04-02 21:47:25,897 INFO The quantify model output:

===========================================================================

Node Cosine Similarity L1 Distance L2 Distance Chebyshev Distance

---------------------------------------------------------------------------

/fc/Gemm 0.999967 0.007190 0.005211 0.008810

2024-04-02 21:47:25,898 INFO [Tue Apr 2 21:47:25 2024] End to Horizon NN Model Convert.

2024-04-02 21:47:26,084 INFO start convert to *.bin file....

2024-04-02 21:47:26,183 INFO ONNX model output num : 1

2024-04-02 21:47:26,184 INFO ############# model deps info #############

2024-04-02 21:47:26,185 INFO hb_mapper version : 1.9.9

2024-04-02 21:47:26,185 INFO hbdk version : 3.37.2

2024-04-02 21:47:26,185 INFO hbdk runtime version: 3.14.14

2024-04-02 21:47:26,186 INFO horizon_nn version : 0.14.0

2024-04-02 21:47:26,186 INFO ############# model_parameters info #############

2024-04-02 21:47:26,186 INFO onnx_model : /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/best_line_follower_model_xy.onnx

2024-04-02 21:47:26,186 INFO BPU march : bernoulli2

2024-04-02 21:47:26,187 INFO layer_out_dump : False

2024-04-02 21:47:26,187 INFO log_level : DEBUG

2024-04-02 21:47:26,187 INFO working dir : /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/model_output

2024-04-02 21:47:26,187 INFO output_model_file_prefix: resnet18_224x224_nv12

2024-04-02 21:47:26,188 INFO ############# input_parameters info #############

2024-04-02 21:47:26,188 INFO ------------------------------------------

2024-04-02 21:47:26,188 INFO ---------input info : input ---------

2024-04-02 21:47:26,189 INFO input_name : input

2024-04-02 21:47:26,189 INFO input_type_rt : nv12

2024-04-02 21:47:26,189 INFO input_space&range : regular

2024-04-02 21:47:26,189 INFO input_layout_rt : None

2024-04-02 21:47:26,190 INFO input_type_train : rgb

2024-04-02 21:47:26,190 INFO input_layout_train : NCHW

2024-04-02 21:47:26,190 INFO norm_type : data_mean_and_scale

2024-04-02 21:47:26,191 INFO input_shape : 1x3x224x224

2024-04-02 21:47:26,191 INFO mean_value : 123.675,116.28,103.53,

2024-04-02 21:47:26,191 INFO scale_value : 0.0171248,0.017507,0.0174292,

2024-04-02 21:47:26,192 INFO cal_data_dir : /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/calibration_data_bgr_f32

2024-04-02 21:47:26,192 INFO ---------input info : input end -------

2024-04-02 21:47:26,192 INFO ------------------------------------------

2024-04-02 21:47:26,192 INFO ############# calibration_parameters info #############

2024-04-02 21:47:26,193 INFO preprocess_on : False

2024-04-02 21:47:26,193 INFO calibration_type: : kl

2024-04-02 21:47:26,193 INFO cal_data_type : N/A

2024-04-02 21:47:26,194 INFO ############# compiler_parameters info #############

2024-04-02 21:47:26,194 INFO hbdk_pass_through_params: --fast --O3

2024-04-02 21:47:26,194 INFO input-source : {'input': 'pyramid', '_default_value': 'ddr'}

2024-04-02 21:47:26,226 INFO Convert to runtime bin file sucessfully!

2024-04-02 21:47:26,226 INFO End Model Convert

[root@1e1a1a7e24f4 mapper]#
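The mean_value and scale_value in the log are the standard ImageNet statistics rescaled to 0-255 pixels: mean_value = 255 × mean and scale_value = 1 / (255 × std). That is also why the converter reports std ≈ [58.39, 57.12, 57.37], the reciprocals of the scales. The sketch reproduces those numbers and adds a cosine-similarity helper like the metric in the node table, where values close to 1.0 mean the quantized output barely differs from the float one. Reading `data_mean_and_scale` as `(pixel - mean) * scale` per channel is our interpretation of the log, not a quote from Horizon's documentation.

```python
import math

# Standard ImageNet statistics (RGB order, 0-1 range) used with torchvision pretrained models.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def yaml_mean_and_scale(mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Rescale to 0-255 pixels: mean_value = 255 * mean, scale_value = 1 / (255 * std)."""
    mean_value = [255 * m for m in mean]
    scale_value = [1 / (255 * s) for s in std]
    return mean_value, scale_value


def normalize(pixel_rgb, mean_value, scale_value):
    """Per-channel (pixel - mean) * scale, our reading of norm_type data_mean_and_scale."""
    return [(p - m) * s for p, m, s in zip(pixel_rgb, mean_value, scale_value)]


def cosine_similarity(a, b):
    """Cosine of the angle between two flattened outputs; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


mean_value, scale_value = yaml_mean_and_scale()
print([round(m, 3) for m in mean_value])   # [123.675, 116.28, 103.53]
print([round(s, 7) for s in scale_value])  # [0.0171248, 0.017507, 0.0174292]
```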

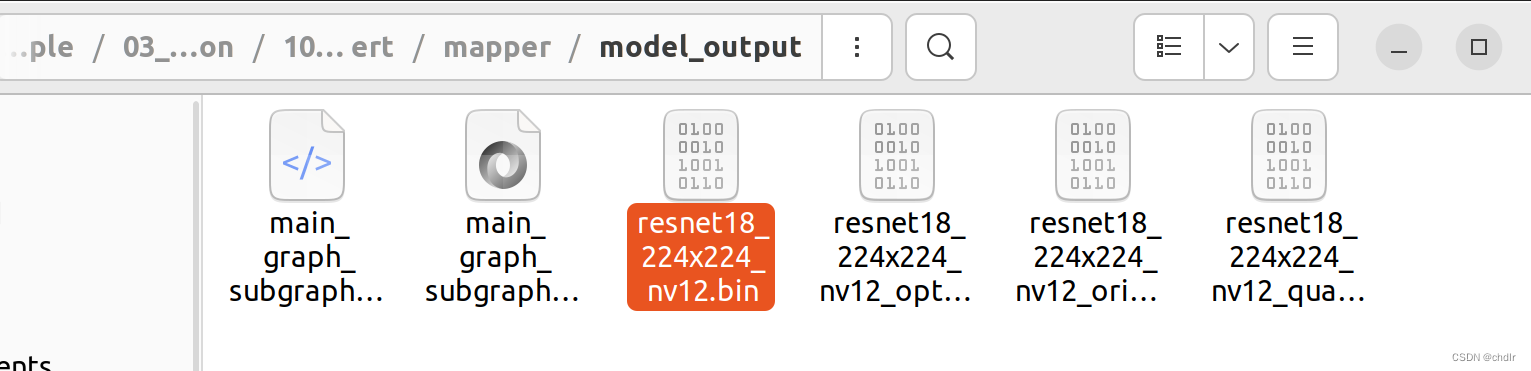

After a successful build, the final model file resnet18_224x224_nv12.bin is generated in the model_output directory.

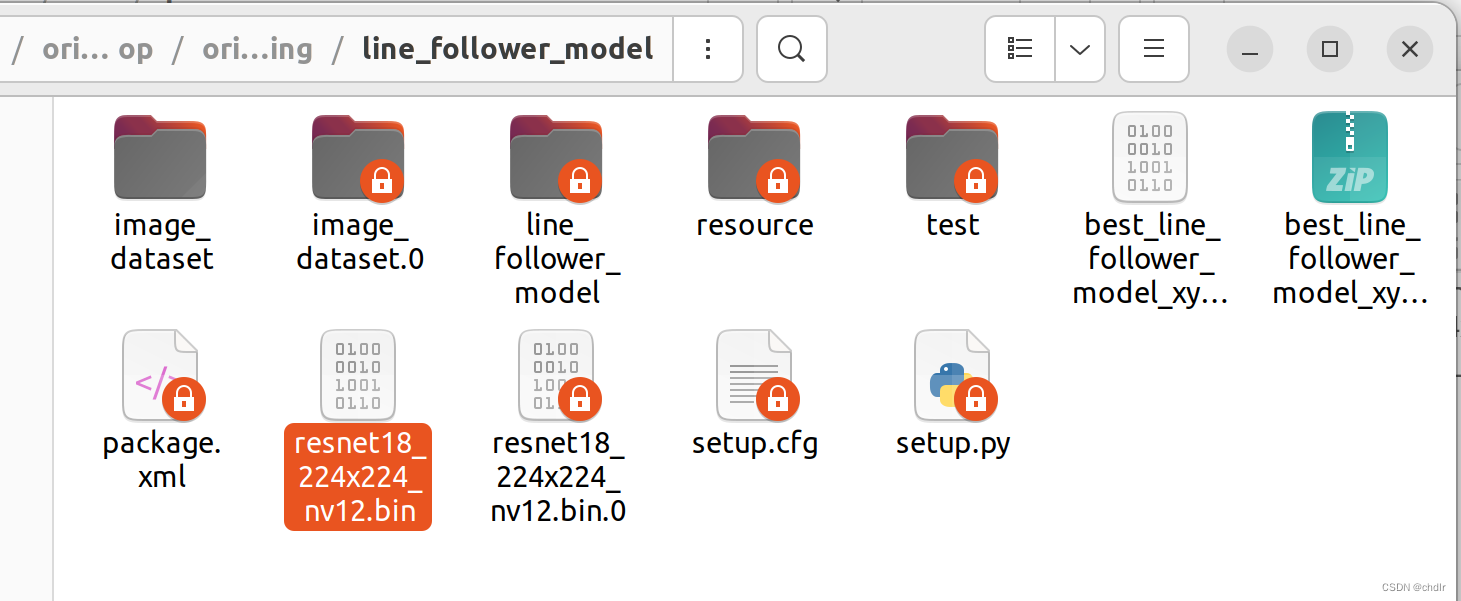

Copy the model file resnet18_224x224_nv12.bin into the line_follower_model package so it is ready for deployment.
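The _nv12 suffix, together with `input_type_rt : nv12` and the conversion from 'RGB' to 'YUV_BT601_FULL_RANGE' in the log, indicates that the deployed model expects NV12 frames at runtime rather than the RGB tensors used in training. The sketch below illustrates that layout, assuming full-range BT.601 (JFIF) coefficients; it shows the format only and is not the code used by the BPU runtime or the camera pipeline.

```python
def rgb_to_yuv_bt601_full(r, g, b):
    """Full-range BT.601 (JFIF) conversion of one 8-bit RGB pixel, clamped to 0-255."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.168736 * r - 0.331264 * g + 0.5 * b + 128
    v = 0.5 * r - 0.418688 * g - 0.081312 * b + 128
    return tuple(max(0, min(255, round(c))) for c in (y, u, v))


def nv12_size(width, height):
    """Bytes in an NV12 frame: full-size Y plane + interleaved U/V at quarter resolution."""
    return width * height + 2 * (width // 2) * (height // 2)


def rgb_to_nv12(pixels, width, height):
    """pixels: row-major list of (r, g, b) tuples; width and height must be even."""
    if width % 2 or height % 2:
        raise ValueError("NV12 needs an even width and height")
    yuv = [rgb_to_yuv_bt601_full(*p) for p in pixels]
    y_plane = bytearray(p[0] for p in yuv)
    uv_plane = bytearray()
    for row in range(0, height, 2):
        for col in range(0, width, 2):
            # Average U and V over each 2x2 block, then store them interleaved (U, V).
            block = [yuv[(row + dr) * width + col + dc] for dr in (0, 1) for dc in (0, 1)]
            uv_plane.append(round(sum(p[1] for p in block) / 4))
            uv_plane.append(round(sum(p[2] for p in block) / 4))
    return bytes(y_plane + uv_plane)
```

For the 224x224 input, `nv12_size(224, 224)` is 75,264 bytes, half the size of the equivalent 8-bit RGB frame. Averaging each 2x2 block for chroma is one common choice; some pipelines take a single pixel per block instead.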

Model Deployment

Copy the compiled fixed-point model resnet18_224x224_nv12.bin into the model folder of the line_follower_perception package on the OriginCar, replacing the original model, and then rebuild the workspace on the OriginCar.

scp -r ./resnet18_224x224_nv12.bin root@<OriginCar-IP>:/root/dev_ws/src/origincar/origincar_deeplearning/line_follower_perception/model/

After the build completes, deploy the model with the following commands, where the parameters model_path and model_name specify the model's path and name:

cd /root/dev_ws/src/origincar/origincar_deeplearning/line_follower_perception/

ros2 run line_follower_perception line_follower_perception --ros-args -p model_path:=model/resnet18_224x224_nv12.bin -p model_name:=resnet18_224x224_nv12

The command output is as follows:

root@ubuntu:~/dev_ws/src/origincar/origincar_deeplearning/line_follower_perception# ros2 run line_follower_perception line_follower_perception --ros-args -p model_path:=model/resnet18_224x224_nv12.bin -p model_name:=resnet18_224x224_nv12

[INFO] [1712122458.232674628] [dnn]: Node init.

[INFO] [1712122458.233179215] [LineFollowerPerceptionNode]: path:model/resnet18_224x224_nv12.bin

[INFO] [1712122458.233256001] [LineFollowerPerceptionNode]: name:resnet18_224x224_nv12

[INFO] [1712122458.233340036] [dnn]: Model init.

[EasyDNN]: EasyDNN version = 1.6.1_(1.18.6 DNN)

[BPU_PLAT]BPU Platform Version(1.3.3)!

[HBRT] set log level as 0. version = 3.15.25.0

[DNN] Runtime version = 1.18.6_(3.15.25 HBRT)

[A][DNN][packed_model.cpp:234][Model](2024-04-03,13:34:18.775.957) [HorizonRT] The model builder version = 1.9.9

[INFO] [1712122458.918322553] [dnn]: The model input 0 width is 224 and height is 224

[INFO] [1712122458.918465125] [dnn]: Task init.

[INFO] [1712122458.920699164] [dnn]: Set task_num [4]

Starting the Camera

First, place the OriginCar in the line-following scene.

Start the camera driver in zero-copy mode with the following commands, which speeds up internal image processing:

export RMW_IMPLEMENTATION=rmw_cyclonedds_cpp

export CYCLONEDDS_URI='<CycloneDDS><Domain><General><NetworkInterfaceAddress>wlan0</NetworkInterfaceAddress></General></Domain></CycloneDDS>'

ros2 launch origincar_bringup usb_websocket_display.launch.py
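CYCLONEDDS_URI can hold inline XML, and the value above tells Cyclone DDS to use the wlan0 interface. If the board connects through a different interface (check its name with `ip addr`), a hypothetical helper like this one regenerates the same string for another interface:

```python
import xml.etree.ElementTree as ET


def cyclonedds_uri(interface):
    """Build the inline CycloneDDS XML that selects one network interface."""
    root = ET.Element("CycloneDDS")
    general = ET.SubElement(ET.SubElement(root, "Domain"), "General")
    ET.SubElement(general, "NetworkInterfaceAddress").text = interface
    return ET.tostring(root, encoding="unicode")  # no XML declaration, no extra whitespace


def export_line(interface):
    """Shell line to paste before launching the ROS 2 nodes."""
    return f"export CYCLONEDDS_URI='{cyclonedds_uri(interface)}'"


print(export_line("wlan0"))
```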

Once the camera has started, the dynamically detected position of the path line can be seen in the line-following terminal.

Starting the Robot

Start the OriginCar chassis, and the robot begins following the line autonomously:

ros2 launch origincar_base origincar_bringup.launch.py