DSS部署-完整版_dss只有两个服务 links-程序员宅基地

技术标签: linkis dss DataSphereStudio部署流程 大数据

文章目录

- DSS部署流程

-

- 第一部分、 背景

- 第二部分、准备虚拟机、环境初始化

- 第三部分、创建hadoop用户

- 第四部分、配置JDK

- 第五部分 Scala部署

- 第六部分、安装MySQL5.7.25

- 第七部分、安装python3

- 第八部分、nginx【dss会自动安装】

- 第九部分、安装hadoop(伪分布式)

- 第十部分、Hive安装部署

- 第十一部分、Spark on Yarn部署

- 第十二部分、DSS一键安装

- 第十三部分、帮助

- 准备kvm虚拟机

- 环境准备:基础软件安装[telnet,tar,sed,dos2unix,yum,zip,unzip,expect,net-tools,ping,curl,]

- 创建用户

- 配置JDK:JDK (1.8.0_141以上)

- 配置Scala:scala

- 安装MySQL:MySQL (5.5+)

- 安装Python:phthon2(如果用python3需要修改dss中的相关配置,建议使用python2)

- 安装nginx:nginx

- 安装hadoop2.7.2

- 安装hive2.3.3

- 安装spark2.0

- 安装准备[linkis,dss,dss-web],修改配置,修改数据库配置

- 执行安装脚本,安装步骤,是否安装成功,启动服务

DSS部署流程

GitHub :linjie_830914

本文主要用于指导用户进行 DataSphereStudio 的安装、部署,以便用户可以快速入手 和认识其核心功能。

第一部分、 背景

自主研发的大数据中台产品,用以帮助用户快速收集数据、整理数据、构建数仓、数据服务以及数据资产管理。其中涉及很多大数据组件,各个组件都有各自的API,导致开发者学习成本较高,也不易于维护。

故考虑部署学习DSS,基于DSS为客户提供服务。

第二部分、准备虚拟机、环境初始化

1、准备虚拟机

首先通过 qemu-img 创建虚拟磁盘文件

#qemu-img create -f qcow2 -o size=50G,preallocation=metadata CentOS7.qcow2

安装虚拟机命令:

#virt-install --name=kvmdss --virt-type=kvm --vcpus=4 --ram=10240 --location=/home/kvm/iso/CentOS-7.2-x86_64-Minimal-1511.iso --disk path=/home/kvm/img/kvmdss.img,size=50,format=qcow2 --network bridge=virbr0 --graphics=none --extra-args='console=ttyS0' --force

–name 虚拟机名

–memory 内存(默认单位: MB)

–disk 指定虚拟磁盘文件,format指定虚拟磁盘格式,bus 指定半虚拟化(virtio) cache 指定磁盘缓存(回写)

–network 执行网络,不指定网络是无法启动的。bridge 执行网桥设备 model 指定虚拟网卡为半虚拟化,优化性能

–graphics 通过什么方式访问界面,这里使用 vnc ,否则无法输入。

–noautoconsole 不用在界面直接弹出安装界面,后面可以通过 virt-view centos 唤出图形界面

查看此机器是否支持虚拟化:grep -i 'vmx\|svm' /proc/cpuinfo

vmx是英特尔CPU,svm是AMD的CPU

虚拟机操作

-

进入

virsh console kvmdss -

查看

virsh list --all -

启动

virsh start kvmdss -

重启

virsh reboot kvmdss -

暂停

virsh suspend kvmdss -

恢复暂停

virsh resume kvmdss -

关闭

virsh shutdown kvmdss -

强制停止

virsh destroy kvmdss -

开机启动指定的虚拟机:

virsh autostart feng01 -

取消开机启动:

virsh autostart --disable feng01 -

挂起虚拟机(相当于windows睡眠):

virsh suspend feng01 -

恢复挂起的虚拟机:

virsh resume feng01

克隆虚拟机:

克隆虚拟机前需要先关机,按feng01机器克隆feng02机器:

virt-clone --original feng01 --name feng02 --file /kvm_data/feng02.img

– original feng01 :克隆源

–name feng02 ;克隆机器的名字

–file /kvm_data/feng02.img : 文件放在那里

镜像操作

- 创建镜像

virsh snapshot-create-as kvmdss kvmdss-image - 查看镜像

virsh snapshot-lisk kvmdss - 删除镜像

virsh snapshot-delete kvmdss kvmdss-image - 恢复镜像

virsh snapshot-revert kvmdss-image - 查看当前快照版本:

virsh snapshot-current kvmdss

2、环境初始化

关闭防火墙

systemctl stop firewalld && systemctl disable firewalld

关闭selinux

sed -i 's/enforcing/disabled/' /etc/selinux/config # 永久

setenforce 0 # 临时

关闭swap

swapoff -a # 临时

sed -ri 's/.*swap.*/#&/' /etc/fstab # 永久

根据规划设置主机名

hostnamectl set-hostname <hostname>

在master添加hosts

cat >> /etc/hosts << EOF

192.168.100.61 k8s-master1

192.168.100.62 k8s-node1

192.168.100.63 k8s-node2

192.168.100.64 k8s-master2

EOF

将桥接的IPv4流量传递到iptables的链

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system # 生效

时间同步

yum install ntpdate -y && ntpdate time.windows.com

安装如下软件

需要zip,官网少一个zip包

yum install -y wget vim telnet tar sed dos2unix zip unzip expect net-tools ping curl

3、准备备如下软件包

jdk\sscala\mysql\python2\nginx\hadoop2.7.2\hive2.3.3\spark2.0

下载链接:https://pan.baidu.com/s/1ydHvk3jc_hAozbbQvBT2Wg,提取码:ojn9

https://blog.csdn.net/weixin_33955681/article/details/92958527)

第三部分、创建hadoop用户

1、创建一个名字为hadoop的普通用户

[root@bigdata-senior01 ~]# useradd hadoop

[root@bigdata-senior01 ~]# passwd hadoop

2、 给hadoop用户sudo权限

注意:如果root用户无权修改sudoers文件,先手动为root用户添加写权限。

[root@bigdata-senior01 ~]# chmod u+w /etc/sudoers

给hadoop用户sudo授权

[root@bigdata-senior01 ~]# vim /etc/sudoers

设置权限,学习环境可以将hadoop用户的权限设置的大一些,但是生产环境一定要注意普通用户的权限限制。

root ALL=(ALL) ALL

hadoop ALL=(root) NOPASSWD:ALL

3、 切换到hadoop用户

[root@bigdata-senior01 ~]# su - hadoop

[hadoop@bigdata-senior01 ~]$

4、 创建存放hadoop文件的目录

[hadoop@bigdata-senior01 ~]$ sudo mkdir /opt/{modules,data}

5、 将hadoop文件夹的所有者指定为hadoop用户

如果存放hadoop的目录的所有者不是hadoop,之后hadoop运行中可能会有权限问题,那么就讲所有者改为hadoop。

[hadoop@bigdata-senior01 ~]# sudo chown -R hadoop:hadoop /opt/modules

第四部分、配置JDK

参考资料

注意:Hadoop机器上的JDK,最好是Oracle的JavaJDK,不然会有一些问题,比如可能没有JPS命令。

如果安装了其他版本的JDK,卸载掉。

卸载原JDK

步骤一:查询系统是否以安装jdk

#rpm -qa|grep java

或

#rpm -qa|grep jdk

或

#rpm -qa|grep gcj

步骤二:卸载已安装的jdk

#rpm -e --nodeps java-1.8.0-openjdk-1.8.0.131-11.b12.el7.x86_64

#rpm -e --nodeps java-1.7.0-openjdk-1.7.0.141-2.6.10.5.el7.x86_64

#rpm -e --nodeps java-1.8.0-openjdk-headless-1.8.0.131-11.b12.el7.x86_64

#rpm -e --nodeps java-1.7.0-openjdk-headless-1.8.0.131-11.b12.el7.x86_64

步骤三:验证一下是还有jdk

#rpm -qa|grep java

#java -version

没有内容证明已经卸载干净了

实际操作记录如下

[root@localhost ~]# java -version

openjdk version "1.8.0_262"

OpenJDK Runtime Environment (build 1.8.0_262-b10)

OpenJDK 64-Bit Server VM (build 25.262-b10, mixed mode)

[root@localhost ~]# rpm -qa|grep jdk

java-1.8.0-openjdk-headless-1.8.0.262.b10-1.el7.x86_64

copy-jdk-configs-3.3-10.el7_5.noarch

java-1.7.0-openjdk-headless-1.7.0.261-2.6.22.2.el7_8.x86_64

java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64

java-1.7.0-openjdk-1.7.0.261-2.6.22.2.el7_8.x86_64

[root@localhost ~]#

[root@localhost ~]# rpm -e --nodeps java-1.8.0-openjdk-headless-1.8.0.262.b10-1.el7.x86_64 copy-jdk-configs-3.3-10.el7_5.noarch java-1.7.0-openjdk-headless-1.7.0.261-2.6.22.2.el7_8.x86_64 java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64 java-1.7.0-openjdk-1.7.0.261-2.6.22.2.el7_8.x86_64

[root@localhost ~]# rpm -qa|grep jdk

[root@localhost ~]#

安装新JDK

(1) 去下载Oracle版本Java JDK:jdk-8u261-linux-x64.tar.gz

Java Archive Downloads - Java SE 7 (oracle.com)

(2) 将jdk-7u67-linux-x64.tar.gz解压到/opt/modules目录下

mkdir -p /opt/modules

sudo tar -zxvf jdk-8u261-linux-x64.tar.gz -C /opt/modules

(3) 添加环境变量

设置JDK的环境变量 JAVA_HOME。需要修改配置文件/etc/profile,追加

sudo vim /etc/profile

export JAVA_HOME="/opt/modules/jdk1.8.0_261"

export PATH=$JAVA_HOME/bin:$PATH

修改完毕后,执行 source /etc/profile

(4)安装后再次执行 java –version,可以看见已经安装完成。

[root@localhost jdk1.8.0_261]# java -version

java version "1.8.0_261"

Java(TM) SE Runtime Environment (build 1.8.0_261-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.261-b12, mixed mode)

[root@localhost hadoop]#

第五部分 Scala部署

[hadoop@bigdata-senior01 modules]$ pwd

/opt/modules

[hadoop@bigdata-senior01 modules]$ tar xf scala-2.12.7.tgz

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

drwxrwxr-x 6 hadoop hadoop 50 Sep 27 2018 scala-2.12.7

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 scala-2.12.7]$ pwd

/opt/modules/scala-2.12.7

[hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 modules]$vim /etc/profile

export SCALA_HOME="/opt/modules/scala-2.12.7"

export PATH=$SCALA_HOME/bin:$PATH

[hadoop@bigdata-senior01 scala-2.12.7]$ sudo vim /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ source /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ echo $SCALA_HOME

/opt/modules/scala-2.12.7

[hadoop@bigdata-senior01 scala-2.12.7]$ scala

Welcome to Scala 2.12.7 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_261).

Type in expressions for evaluation. Or try :help.

scala>

https://blog.csdn.net/weixin_33955681/article/details/92958527)

第六部分、安装MySQL5.7.25

1、删除centos系统自带的mariadb数据库防止发生冲突

rpm -qa|grep mariadb

rpm -e mariadb-libs --nodeps

2、安装libaio库

yum -y install libaio

3、下载并解压mysql-5.7.25

cd /opt/modules/

wget https://dev.mysql.com/get/Downloads/MySQL-5.7/mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

tar xzvf mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

4、查看是否有mysql用户和mysql用户组

cat /etc/passwd|grep mysql

cat /etc/group|grep mysql# 如果存在,则删除用户和用户组userdel -r mysql

5、创建mysql用户及其用户组

groupadd mysql

useradd -r -g mysql mysql

6、设置mysql用户为非登陆用户

usermod -s /sbin/nologin mysql

7、创建basedir、datadir目录、pid文件

mkdir /opt/mysql

mkdir /opt/mysql/data

mv mysql-5.7.25-linux-glibc2.12-x86_64/* /opt/mysql/

touch /opt/mysql/mysqld.pid

chown -R mysql:mysql /opt/mysql

8、创建日志

touch /var/log/mysqld.log

chown mysql:mysql /var/log/mysqld.log

9、创建socket文件

touch /tmp/mysql.sock

chown mysql:mysql /tmp/mysql.sock

10、创建配置文件vim /etc/my.cnf并加入如下内容

[mysqld]

character-set-server=utf8

user=mysql

port=3306

basedir=/opt/mysql

datadir=/opt/mysql/data

socket=/tmp/mysql.sock

[mysqld_safe]

log-error=/var/log/mysqld.log

pid-file=/opt/mysql/mysqld.pid

[client]

port=3306

socket=/tmp/mysql.sock

11、安装初始化

cd /opt/mysql/bin/

./mysqld --defaults-file=/etc/my.cnf --initialize --user=mysql

成功即为如下图所示,记录临时密码。

[root@bigdata-senior01 modules]# mv mysql-5.7.25-linux-glibc2.12-x86_64/* /opt/mysql/

[root@bigdata-senior01 modules]# touch /opt/mysql/mysqld.pid

[root@bigdata-senior01 modules]# chown -R mysql:mysql /opt/mysql

[root@bigdata-senior01 modules]# touch /var/log/mysqld.log

[root@bigdata-senior01 modules]# chown mysql:mysql /var/log/mysqld.log

[root@bigdata-senior01 modules]# touch /tmp/mysql.sock

[root@bigdata-senior01 modules]# chown mysql:mysql /tmp/mysql.sock

[root@bigdata-senior01 modules]# vim /etc/my.cnf

[root@bigdata-senior01 modules]# cd /opt/mysql/bin/

[root@bigdata-senior01 bin]# ./mysqld --defaults-file=/etc/my.cnf --initialize --user=mysql

2022-01-05T06:30:34.747800Z 0 [Warning] TIMESTAMP with implicit DEFAULT value is deprecated. Please use --explicit_defaults_for_timestamp server option (see documentation for more details).

2022-01-05T06:30:35.045935Z 0 [Warning] InnoDB: New log files created, LSN=45790

2022-01-05T06:30:35.085211Z 0 [Warning] InnoDB: Creating foreign key constraint system tables.

2022-01-05T06:30:35.167573Z 0 [Warning] No existing UUID has been found, so we assume that this is the first time that this server has been started. Generating a new UUID: fd58c915-6df0-11ec-96b4-000c297b38d9.

2022-01-05T06:30:35.179666Z 0 [Warning] Gtid table is not ready to be used. Table 'mysql.gtid_executed' cannot be opened.

2022-01-05T06:30:35.185087Z 1 [Note] A temporary password is generated for root@localhost: &:yE0ZgoexP1

[root@bigdata-senior01 bin]#

临时密码:&:yE0ZgoexP1

kvm的虚拟机密码:

Lkp>amgj>3Ys

12、设置开机启动

复制启动脚本到资源目录:

cp ../support-files/mysql.server /etc/rc.d/init.d/mysqld

增加mysqld控制脚本权限:

chmod +x /etc/rc.d/init.d/mysqld

将mysqld加入到系统服务:

chkconfig --add mysqld

检查mysqld服务是否生效:

chkconfig --list mysqld

命令输出类似如下:

[root@hadoop bin]# ll ../support-files/mysql.server

-rwxr-xr-x 1 mysql mysql 10576 Dec 21 2018 ../support-files/mysql.server

[root@hadoop bin]# cp ../support-files/mysql.server /etc/rc.d/init.d/mysqld

[root@hadoop bin]# chmod +x /etc/rc.d/init.d/mysqld

[root@hadoop bin]# chkconfig --add mysqld

[root@hadoop bin]# chkconfig --list mysqld

Note: This output shows SysV services only and does not include native

systemd services. SysV configuration data might be overridden by native

systemd configuration.

If you want to list systemd services use 'systemctl list-unit-files'.

To see services enabled on particular target use

'systemctl list-dependencies [target]'.

mysqld 0:off 1:off 2:on 3:on 4:on 5:on 6:off

[root@hadoop bin]#

现在即可使用service命令控制mysql启动、停止。

PS:删除启动命令:

chkconfig --del mysqld

13、启动mysqld服务

service mysqld start

[root@hadoop bin]# chkconfig --del mysqld

[root@hadoop bin]# service mysqld start

Starting MySQL. SUCCESS!

[root@hadoop bin]#

14、环境变量配置

编辑/etc/profile,加入如下内容:

export PATH=$PATH:/opt/mysql/bin

执行命令使其生效:

source /etc/profile

15、登录mysql(使用随机生成的那个密码)

mysql -uroot -p'Lkp>amgj>3Ys'

修改root密码:

mysql> alter user "root"@"localhost" identified by "abcd123";

刷新权限:

mysql> flush privileges;

退出mysql,使用新密码登录mysql。

[root@hadoop bin]# vim /etc/profile

[root@hadoop bin]# source /etc/profile

[root@hadoop bin]# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 5

Server version: 5.7.25

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> alter user "root"@"localhost" identified by "abcd123";

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> exit

Bye

[root@hadoop bin]# mysql -uroot -pabcd123

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 6

Server version: 5.7.25 MySQL Community Server (GPL)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

16、添加远程登录用户

默认只允许 root 帐户在本地登录mysql,如果要在其它机器上连接MySQL,必须修改 root 允许远程连接,或者添加一个允许远程连接的帐户,为了安全起见,可以添加一个新的帐户。

mysql> grant all privileges on *.* to "root"@"%" identified by "abcd123" with grant option;

17、开启防火墙mysql3306端口的外部访问

firewall-cmd --zone=public --add-port=3306/tcp --permanent

firewall-cmd --reload

参数说明:

–zone:作用域,网络区域定义了网络连接的可信等级。

–add-port:添加端口与通信协议,格式:端口/通信协议,协议为tcp或udp。

–permanent:永久生效,没有此参数系统重启后端口访问失败。

18、重新启动mysql

[root@test ~]# systemctl restart mysqld.service

19、常见错误

1 mysql5.7初始化密码报错 ERROR 1820 (HY000): You must reset your password using ALTER USER statement before

2 修改mysql密码出现报错:ERROR 1064 (42000): You have an error in your SQL syntax; check the manual that corres

3 ERROR 1820 (HY000): You must reset your password using ALTER USER statement before executing this st

4 centos mysql 端口_Linux CentOS Mysql修改默认端口

5 mysql 5.7安全策略设置 报错ERROR 1193 (HY000): Unknown system variable 'validate_password

6 CentOS 7下启动、关闭、重启、查看MySQL服务

7 centos7 安装MySQL7 并更改初始化密码

在Linux上安装Python3 - lemon锋 - 博客园 (cnblogs.com)

第七部分、安装python3

dss默认使用python2,如果用python3需要该配置,建议默认使用python3,安装方法与python3类似

下载pip(适用于python3)

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

下载pip(适用于python2)

curl https://bootstrap.pypa.io/pip/2.7/get-pip.py -o get-pip.py

安装pip

python get-pip.py

升级pip

pip install --upgrade pip

安装matplotlib

python -m pip install -i https://pypi.tuna.tsinghua.edu.cn/simple matplotlib

pip install matplotlib

安装依赖环境

输入命令:

# 安装依赖环境

yum -y install zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gdbm-devel db4-devel libpcap-devel xz-devel

# 下载python包

wget https://www.python.org/ftp/python/3.7.1/Python-3.7.1.tgz

tar -zxvf Python-3.7.1.tgz

yum install gcc

# 3.7版本之后需要一个新的包libffi-devel

yum install libffi-devel -y

cd Python-3.7.1

./configure --prefix=/usr/local/python3

编译:

make

编译成功后,编译安装:

make install

检查python3.7的编译器:

/usr/local/python3/bin/python3.7

建立Python3和pip3的软链:

ln -s /usr/local/python3/bin/python3 /usr/bin/python3

ln -s /usr/local/python3/bin/pip3 /usr/bin/pip3

# 会导致yum报错,详见本文最后

并将/usr/local/python3/bin加入PATH

(1)vim /etc/profile

(2)按“I”,然后贴上下面内容:

# vim ~/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin:/usr/local/python3/bin

export PATH

source ~/.bash_profile

检查Python3及pip3是否正常可用:

python3 -V

pip3 -V

python2的处理

yum -y install epel-release

yum -y install python-pip

第八部分、nginx【dss会自动安装】

文章转自:https://www.cnblogs.com/liujuncm5/p/6713784.html

安装所需环境

Nginx 是 C语言 开发,建议在 Linux 上运行,当然,也可以安装 Windows 版本,本篇则使用 CentOS 7 作为安装环境。

一. gcc 安装

安装 nginx 需要先将官网下载的源码进行编译,编译依赖 gcc 环境,如果没有 gcc 环境,则需要安装:

yum install -y gcc-c++

二. PCRE pcre-devel 安装

PCRE(Perl Compatible Regular Expressions) 是一个Perl库,包括 perl 兼容的正则表达式库。nginx 的 http 模块使用 pcre 来解析正则表达式,所以需要在 linux 上安装 pcre 库,pcre-devel 是使用 pcre 开发的一个二次开发库。nginx也需要此库。命令:

yum install -y pcre pcre-devel

三. zlib 安装

zlib 库提供了很多种压缩和解压缩的方式, nginx 使用 zlib 对 http 包的内容进行 gzip ,所以需要在 Centos 上安装 zlib 库。

yum install -y zlib zlib-devel

四. OpenSSL 安装

OpenSSL 是一个强大的安全套接字层密码库,囊括主要的密码算法、常用的密钥和证书封装管理功能及 SSL 协议,并提供丰富的应用程序供测试或其它目的使用。

nginx 不仅支持 http 协议,还支持 https(即在ssl协议上传输http),所以需要在 Centos 安装 OpenSSL 库。

yum install -y openssl openssl-devel

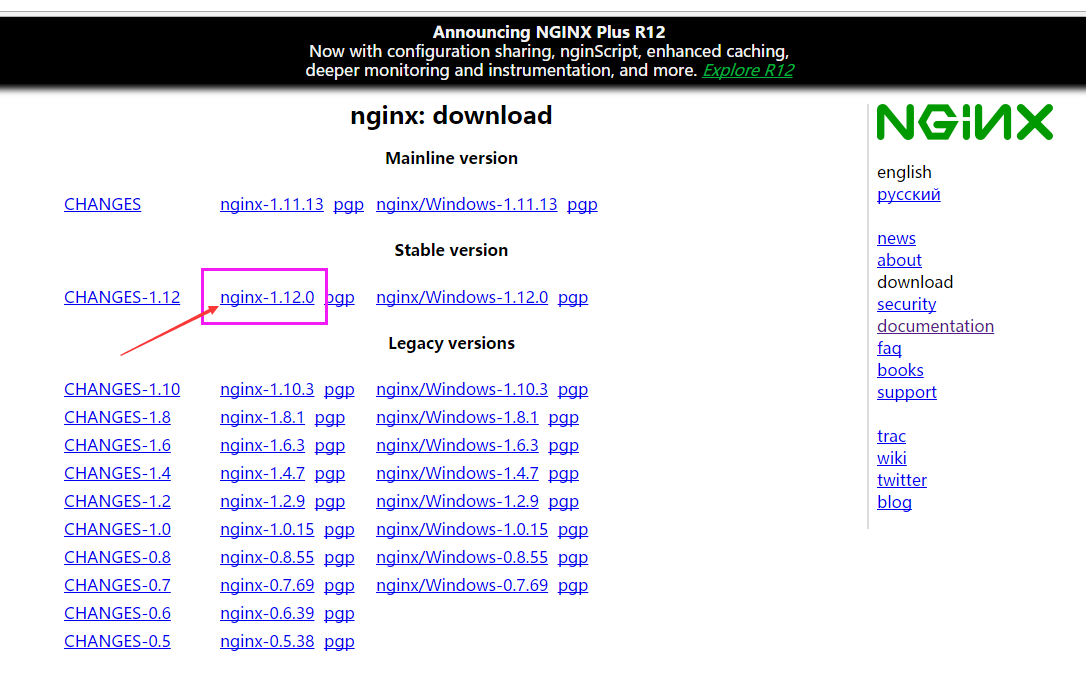

官网下载

1.直接下载.tar.gz安装包,地址:https://nginx.org/en/download.html

2.使用wget命令下载(推荐)。确保系统已经安装了wget,如果没有安装,执行 yum install wget 安装。

wget -c https://nginx.org/download/nginx-1.21.6.tar.gz

我下载的是1.12.0版本,这个是目前的稳定版。

解压

依然是直接命令:

tar -zxvf nginx-1.21.6.tar.gz

cd /opt/modules/nginx-1.21.6

配置

其实在 nginx-1.12.0 版本中你就不需要去配置相关东西,默认就可以了。当然,如果你要自己配置目录也是可以的。

1.使用默认配置

./configure

2.自定义配置(不推荐)

./configure \

--prefix=/usr/local/nginx \

--conf-path=/usr/local/nginx/conf/nginx.conf \

--pid-path=/usr/local/nginx/conf/nginx.pid \

--lock-path=/var/lock/nginx.lock \

--error-log-path=/var/log/nginx/error.log \

--http-log-path=/var/log/nginx/access.log \

--with-http_gzip_static_module \

--http-client-body-temp-path=/var/temp/nginx/client \

--http-proxy-temp-path=/var/temp/nginx/proxy \

--http-fastcgi-temp-path=/var/temp/nginx/fastcgi \

--http-uwsgi-temp-path=/var/temp/nginx/uwsgi \

--http-scgi-temp-path=/var/temp/nginx/scgi

注:将临时文件目录指定为/var/temp/nginx,需要在/var下创建temp及nginx目录

编译安装

make

make install

查找安装路径:

whereis nginx

启动、停止nginx

cd /usr/local/nginx/sbin/

./nginx

./nginx -s stop

./nginx -s quit

./nginx -s reload

启动时报80端口被占用:

nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address already in use)

解决办法:1、安装net-tool 包:

yum install net-tools

./nginx -s quit:此方式停止步骤是待nginx进程处理任务完毕进行停止。

./nginx -s stop:此方式相当于先查出nginx进程id再使用kill命令强制杀掉进程。

查询nginx进程:

ps aux|grep nginx

重启 nginx

1.先停止再启动(推荐):

对 nginx 进行重启相当于先停止再启动,即先执行停止命令再执行启动命令。如下:

./nginx -s quit

./nginx

2.重新加载配置文件:

当 ngin x的配置文件 nginx.conf 修改后,要想让配置生效需要重启 nginx,使用-s reload不用先停止 ngin x再启动 nginx 即可将配置信息在 nginx 中生效,如下:

./nginx -s reload

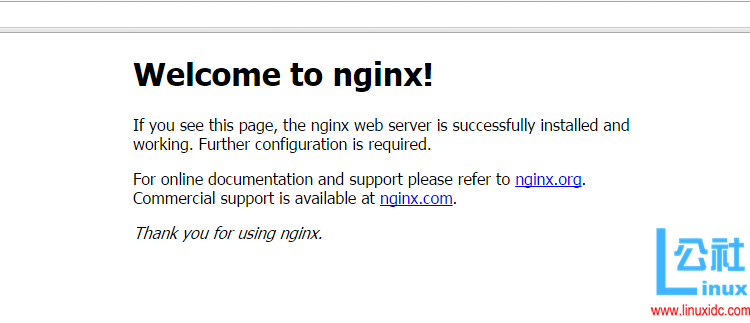

启动成功后,在浏览器可以看到这样的页面:

设置nginx的服务(dss所需)

vim /usr/lib/systemd/system/nginx.service

vim /usr/local/nginx/logs/nginx.pid

mkdir -p /etc/nginx/conf.d

nginx.service脚本如下:

[Unit]

Description=nginx - high performance web server

Documentation=http://nginx.org/en/docs/

After=network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=/usr/local/nginx/logs/nginx.pid

ExecStartPre=/usr/local/nginx/sbin/nginx -t -c /usr/local/nginx/conf/nginx.conf

ExecStart=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/nginx.conf

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=true

[Install]

WantedBy=multi-user.target

开始开机启动脚本如下:

systemctl enable nginx.service

systemctl start nginx.service

增加conf.d文件夹(dss所需)

创建 /etc/nginx/conf.d 文件夹

在原来文件/etc/nginx/nginx.conf 的http 块下加一句话就可以了:

include /etc/nginx/conf.d/*.conf;

增加nginx虚拟主机配置文件(conf.d) - 与f - 博客园 (cnblogs.com)

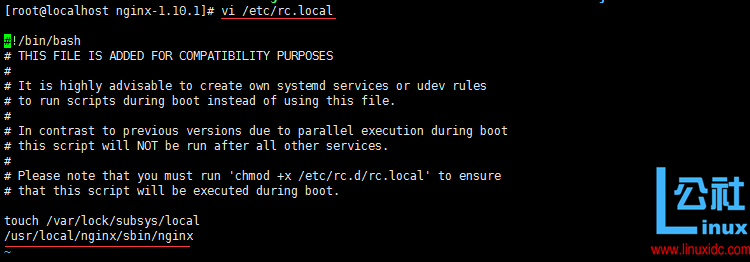

开机自启动

即在rc.local增加启动代码就可以了。

vi /etc/rc.local

增加一行 /usr/local/nginx/sbin/nginx

设置链接(安装dss用到):

ln -s /usr/local/nginx /etc/nginx

设置执行权限:

chmod 755 rc.local

到这里,nginx就安装完毕了,启动、停止、重启操作也都完成了,当然,你也可以添加为系统服务,我这里就不在演示了。

CentOS7安装Nginx - boonya - 博客园 (cnblogs.com)

--------------------------------------分割线 --------------------------------------

Nginx负载均衡配置实战 http://www.linuxidc.com/Linux/2014-12/110036.htm

CentOS 6.2实战部署Nginx+MySQL+PHP http://www.linuxidc.com/Linux/2013-09/90020.htm

使用Nginx搭建WEB服务器 http://www.linuxidc.com/Linux/2013-09/89768.htm

搭建基于Linux6.3+Nginx1.2+PHP5+MySQL5.5的Web服务器全过程 http://www.linuxidc.com/Linux/2013-09/89692.htm

CentOS 6.3下Nginx性能调优 http://www.linuxidc.com/Linux/2013-09/89656.htm

CentOS 6.3下配置Nginx加载ngx_pagespeed模块 http://www.linuxidc.com/Linux/2013-09/89657.htm

CentOS 6.4安装配置Nginx+Pcre+php-fpm http://www.linuxidc.com/Linux/2013-08/88984.htm

Nginx安装配置使用详细笔记 http://www.linuxidc.com/Linux/2014-07/104499.htm

Nginx日志过滤 使用ngx_log_if不记录特定日志 http://www.linuxidc.com/Linux/2014-07/104686.htm

--------------------------------------分割线 --------------------------------------

Nginx 的详细介绍:请点这里

Nginx 的下载地址:请点这里

彻底删除nginx

在开局配置Nginx时有可能会配置错误,报各种错误代码。看不懂或者懒得去看这个报错时,其实最简单的方式是卸载并重装咯。今天就带大家一起学习下,如何彻底卸载nginx程序。

卸载nginx程序的详细步骤

1、停止Nginx软件

/usr/local/nginx/sbin/nginx -s stop

如果不知道nginx安装路径,可以通过执行ps命令找到nginx程序的PID,然后kill其PID

2、查找根下所有名字包含nginx的文件

find / -name nginx

3、执行命令 rm -rf *删除nignx安装的相关文件

说明:全局查找往往会查出很多相关文件,但是前缀基本都是相同,后面不同的部分可以用*代替,以便快速删除~

[root@qll251 ~]# rm -rf /usr/local/sbin/nginx

[root@qll251 ~]# rm -rf /usr/local/nginx

[root@qll251 ~]# rm -rf /usr/src/nginx-1.11.1

[root@qll251 ~]# rm -rf /var/spool/mail/nginx

4、其他设置

如果设置了Nginx开机自启动的话,可能还需要下面两步

chkconfig nginx off

rm -rf /etc/init.d/nginx

删除之后,便可重新安装nginx了

————————————————

版权声明:本文为CSDN博主「开源Linux」的原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接及本声明。

原文链接:https://blog.csdn.net/weixin_38889300/article/details/106682750

第九部分、安装hadoop(伪分布式)

解压Hadoop目录文件

1、 复制hadoop-2.7.2.tar.gz到/opt/modules目录下。

2、 解压hadoop-2.7.2.tar.gz

[hadoop@bigdata-senior01 ~]# cd /opt/modules

[hadoop@bigdata-senior01 hadoop]# tar -zxvf hadoop-2.7.2.tar.gz

配置Hadoop

1、 配置Hadoop环境变量

[hadoop@bigdata-senior01 hadoop]# vim /etc/profile

追加配置:

export HADOOP_HOME="/opt/modules/hadoop-2.7.2"

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

执行:source /etc/profile 使得配置生效

验证HADOOP_HOME参数:

[hadoop@bigdata-senior01 /]$ echo $HADOOP_HOME

/opt/modules/hadoop-2.7.2

2、 配置 hadoop-env.sh、mapred-env.sh、yarn-env.sh文件的JAVA_HOME参数

[hadoop@bigdata-senior01 ~]$ sudo vim ${HADOOP_HOME}/etc/hadoop/hadoop-env.sh

需要先配置jdk

cd /opt/modules

tar -zxvf jdk-7u67-linux-x64.tar.gz

修改JAVA_HOME参数为:

export JAVA_HOME="/opt/modules/jdk1.8.0_261"

export JAVA_HOME="/opt/modules/jdk1.7.0_79"

# The java implementation to use.

export JAVA_HOME=/opt/modules/jdk1.7.0_79

# Location of Hadoop.

export HADOOP_HOME=/opt/modules/hadoop-2.7.2

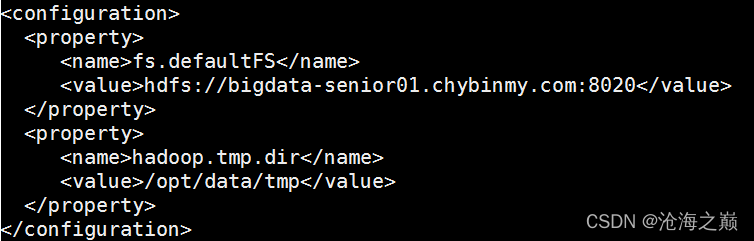

3、 配置core-site.xml

sudo vim ${HADOOP_HOME}/etc/hadoop/core-site.xml

(1)先永久修改hostname

想永久修改,应该修改配置文件 /etc/sysconfig/network。

命令:[root@bigdata-senior01 ~] vim /etc/sysconfig/network

打开文件后,

NETWORKING=yes #使用网络

HOSTNAME=dss #设置主机名

然后配置Host

命令:[root@bigdata-senior01 ~] vim /etc/hosts

添加hosts: 192.168.100.20 bigdata-senior01.chybinmy.com

再进行fs.defaultFS参数配置的是HDFS的地址。

<property>

<name>fs.defaultFS</name>

<value>hdfs://dss:8020</value>

</property>

(2) hadoop.tmp.dir配置的是Hadoop临时目录,比如HDFS的NameNode数据默认都存放这个目录下,查看*-default.xml等默认配置文件,就可以看到很多依赖 h a d o o p . t m p . d i r 的 配 置 。 默 认 的 h a d o o p . t m p . d i r 是 / t m p / h a d o o p − {hadoop.tmp.dir}的配置。 默认的hadoop.tmp.dir是/tmp/hadoop- hadoop.tmp.dir的配置。默认的hadoop.tmp.dir是/tmp/hadoop−{user.name},此时有个问题就是NameNode会将HDFS的元数据存储在这个/tmp目录下,如果操作系统重启了,系统会清空/tmp目录下的东西,导致NameNode元数据丢失,是个非常严重的问题,所有我们应该修改这个路径。

创建临时目录:

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sudo mkdir -p /opt/data/tmp

将临时目录的所有者修改为hadoop

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sudo chown hadoop:hadoop -R /opt/data/tmp

修改hadoop.tmp.dir

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/data/tmp</value>

</property>

配置、格式化、启动HDFS

1、 配置hdfs-site.xml

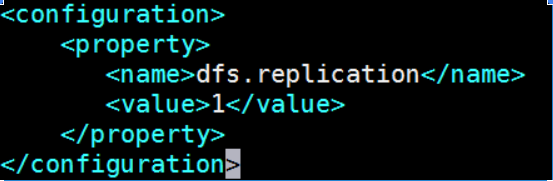

[hadoop@bigdata-senior01 hadoop-2.7.2]$ vim ${HADOOP_HOME}/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

dfs.replication配置的是HDFS存储时的备份数量,因为这里是伪分布式环境只有一个节点,所以这里设置为1。

2、 格式化HDFS

先编辑 ~/.bash_profile 配置文件,增加 Hadoop 相关用户环境变量,配置后就不需要到hadoop的路径下执行相关hdfs命令了

添加后bash_profile文件完整内容如下:

sudo vi ~/.bash_profile

export JAVA_HOME=/opt/modules/jdk1.8.0_261/

export JAVA_BIN=$JAVA_HOME/bin

export JAVA_LIB=$JAVA_HOME/lib

export CLASSPATH=.:$JAVA_LIB/tools.jar:$JAVA_LIB/dt.jar

export HADOOP_HOME=/opt/modules/hadoop-2.7.2/

PATH=$PATH:$HOME/.local/bin:$HOME/bin:$JAVA_BIN:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export PATH

同样地,环境变量配置完之后,记得执行 source ~/.bash_profile 命令使环境变量生效。

[hadoop@bigdata-senior01 ~]$ hdfs namenode –format -force

格式化是对HDFS这个分布式文件系统中的DataNode进行分块,统计所有分块后的初始元数据的存储在NameNode中。

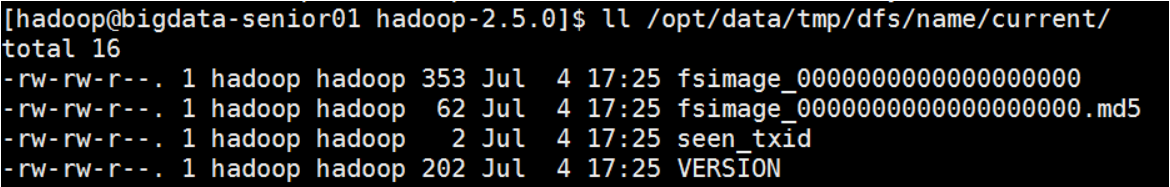

格式化后,查看core-site.xml里hadoop.tmp.dir(本例是/opt/data目录)指定的目录下是否有了dfs目录,如果有,说明格式化成功。

注意:

1.格式化时,这里注意hadoop.tmp.dir目录的权限问题,应该hadoop普通用户有读写权限才行,可以将/opt/data的所有者改为hadoop。

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sudo chown -R hadoop:hadoop /opt/data

2.查看NameNode格式化后的目录。

[hadoop@bigdata-senior01 ~]$ ll /opt/data/tmp/dfs/name/current

fsimage是NameNode元数据在内存满了后,持久化保存到的文件。

fsimage*.md5 是校验文件,用于校验fsimage的完整性。

seen_txid 是hadoop的版本

vession文件里保存:

namespaceID:NameNode的唯一ID。

clusterID:集群ID,NameNode和DataNode的集群ID应该一致,表明是一个集群。

#Mon Jul 04 17:25:50 CST 2016

namespaceID=2101579007

clusterID=CID-205277e6-493b-4601-8e33-c09d1d23ece4

cTime=0

storageType=NAME_NODE

blockpoolID=BP-1641019026-127.0.0.1-1467624350057

layoutVersion=-57

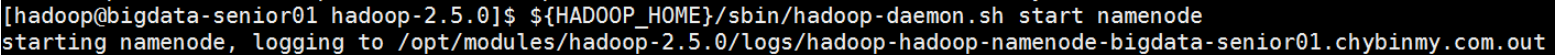

3、 启动NameNode

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /opt/modules/hadoop-2.7.2/logs/hadoop-hadoop-namenode-bigdata-senior01.chybinmy.com.out

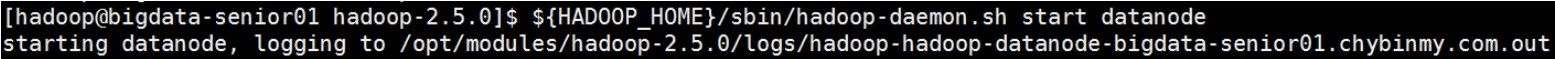

4、 启动DataNode

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/sbin/hadoop-daemon.sh start datanode

starting datanode, logging to /opt/modules/hadoop-2.7.2/logs/hadoop-hadoop-datanode-bigdata-senior01.chybinmy.com.out

5、 启动SecondaryNameNode

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/sbin/hadoop-daemon.sh start secondarynamenode

starting secondarynamenode, logging to /opt/modules/hadoop-2.7.2/logs/hadoop-hadoop-secondarynamenode-bigdata-senior01.chybinmy.com.out

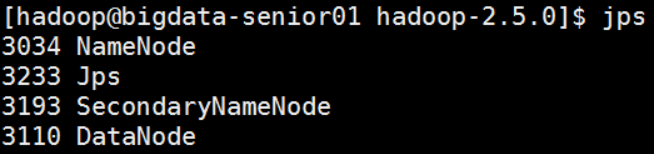

6、 JPS命令查看是否已经启动成功,有结果就是启动成功了。

[hadoop@bigdata-senior01 hadoop-2.7.2]$ jps

3034 NameNode

3233 Jps

3193 SecondaryNameNode

3110 DataNode

现在可以打开:localhost:50070,但是需要关闭selinux才能在本机打开vmware workstation中的服务

7、 HDFS上测试创建目录、上传、下载文件

HDFS上创建目录

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/bin/hdfs dfs -mkdir /demo1

上传本地文件到HDFS上

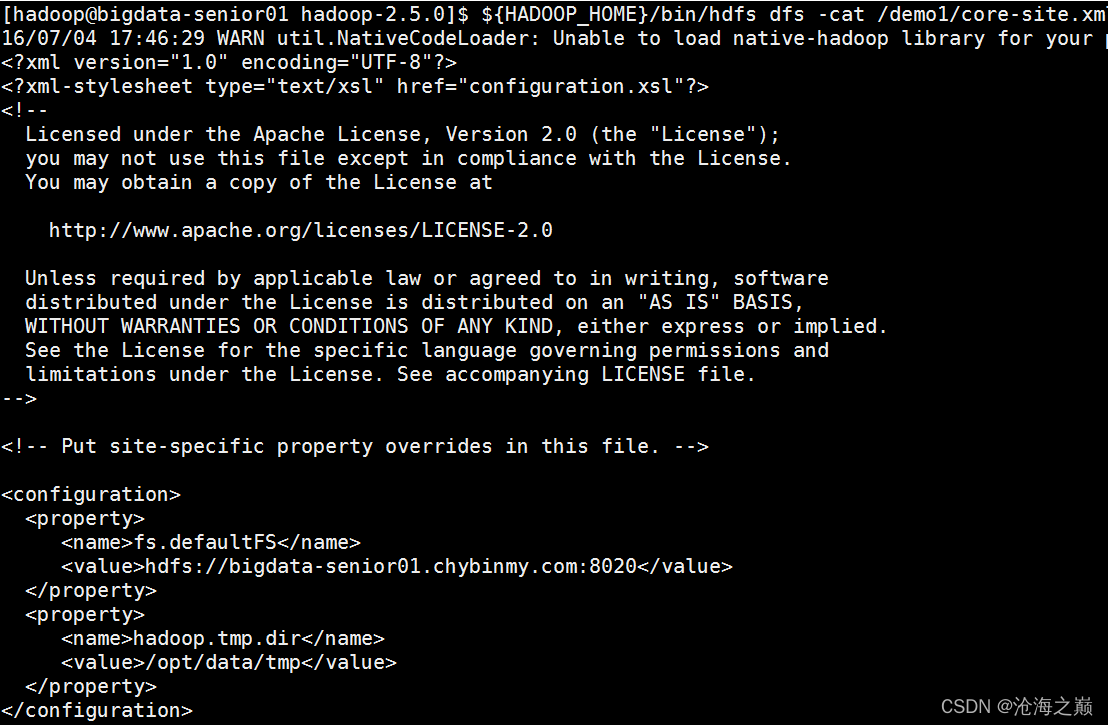

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/bin/hdfs dfs -put ${HADOOP_HOME}/etc/hadoop/core-site.xml /demo1

读取HDFS上的文件内容

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/bin/hdfs dfs -cat /demo1/core-site.xml

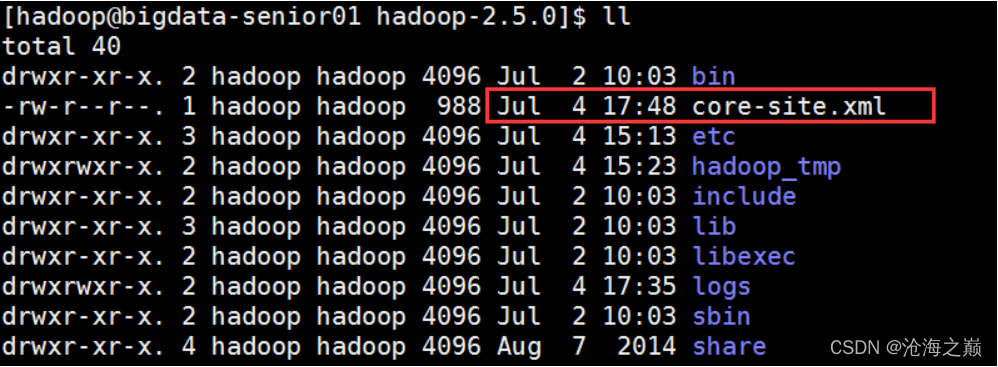

从HDFS上下载文件到本地

[hadoop@bigdata-senior01 hadoop-2.7.2]$ bin/hdfs dfs -get /demo1/core-site.xml

配置、启动YARN

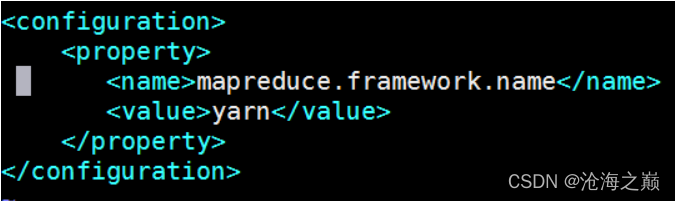

1、 配置mapred-site.xml

默认没有mapred-site.xml文件,但是有个mapred-site.xml.template配置模板文件。复制模板生成mapred-site.xml。

cd /opt/modules/hadoop-2.7.2

cp etc/hadoop/mapred-site.xml.template etc/hadoop/mapred-site.xml

添加配置如下:

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

指定mapreduce运行在yarn框架上。

2、 配置yarn-site.xml

添加配置如下:

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>dss</value>

</property>

yarn.nodemanager.aux-services配置了yarn的默认混洗方式,选择为mapreduce的默认混洗算法。

yarn.resourcemanager.hostname指定了Resourcemanager运行在哪个节点上。

3、 启动Resourcemanager

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/sbin/yarn-daemon.sh start resourcemanager

4、 启动nodemanager

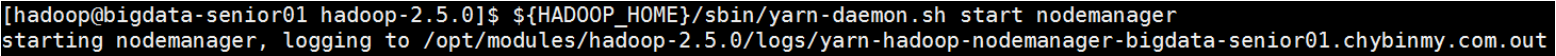

[hadoop@bigdata-senior01 hadoop-2.7.2]$ ${HADOOP_HOME}/sbin/yarn-daemon.sh start nodemanager

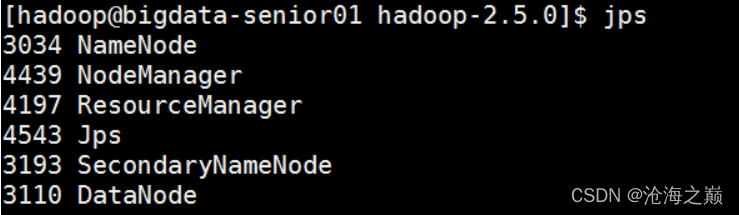

5、 查看是否启动成功

[hadoop@bigdata-senior01 hadoop-2.7.2]$ jps

3034 NameNode

4439 NodeManager

4197 ResourceManager

4543 Jps

3193 SecondaryNameNode

3110 DataNode

可以看到ResourceManager、NodeManager已经启动成功了。

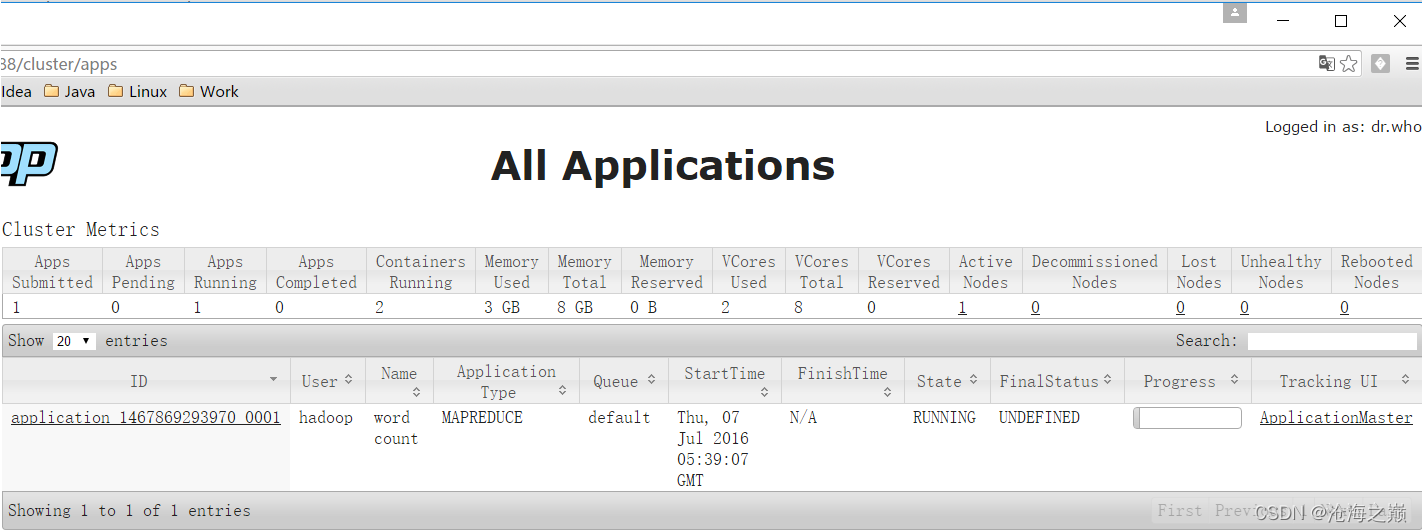

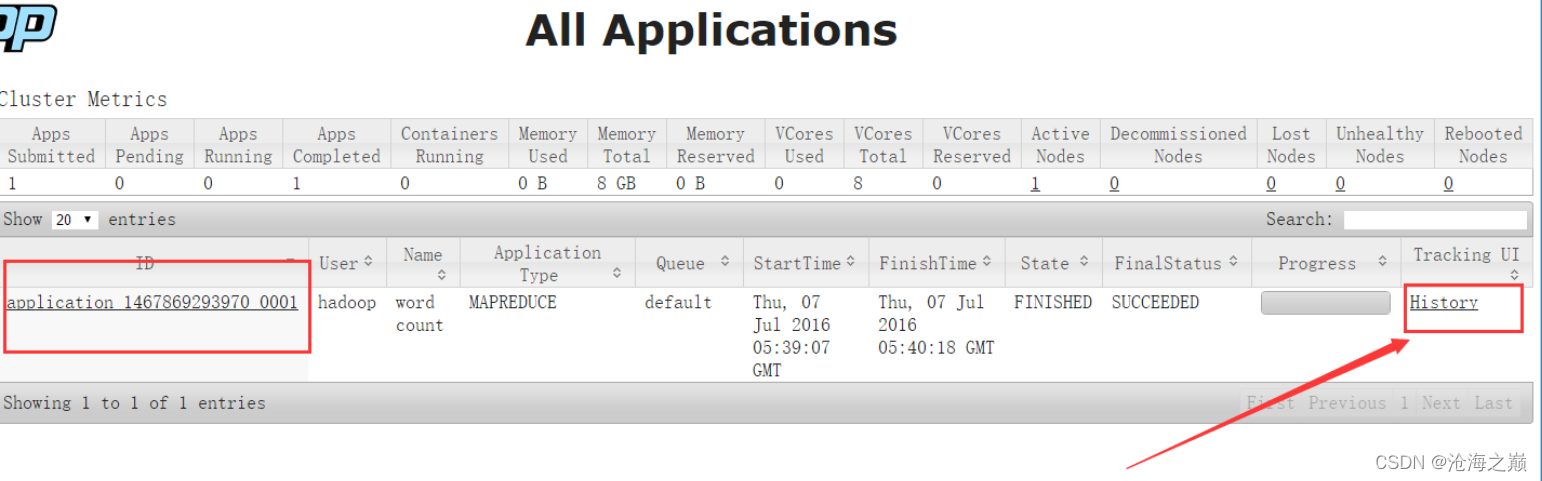

6、 YARN的Web页面

现在可以打开:localhost:8088,但是需要关闭selinux才能在本机打开vmware workstation中的服务

YARN的Web客户端端口号是8088,通过http://192.168.100.10:8088/可以查看。

运行MapReduce Job

在Hadoop的share目录里,自带了一些jar包,里面带有一些mapreduce实例小例子,位置在share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar,可以运行这些例子体验刚搭建好的Hadoop平台,我们这里来运行最经典的WordCount实例。

1、 创建测试用的Input文件

创建输入目录:

[hadoop@bigdata-senior01 hadoop-2.7.2]$ bin/hdfs dfs -mkdir -p /wordcountdemo/input

创建原始文件:

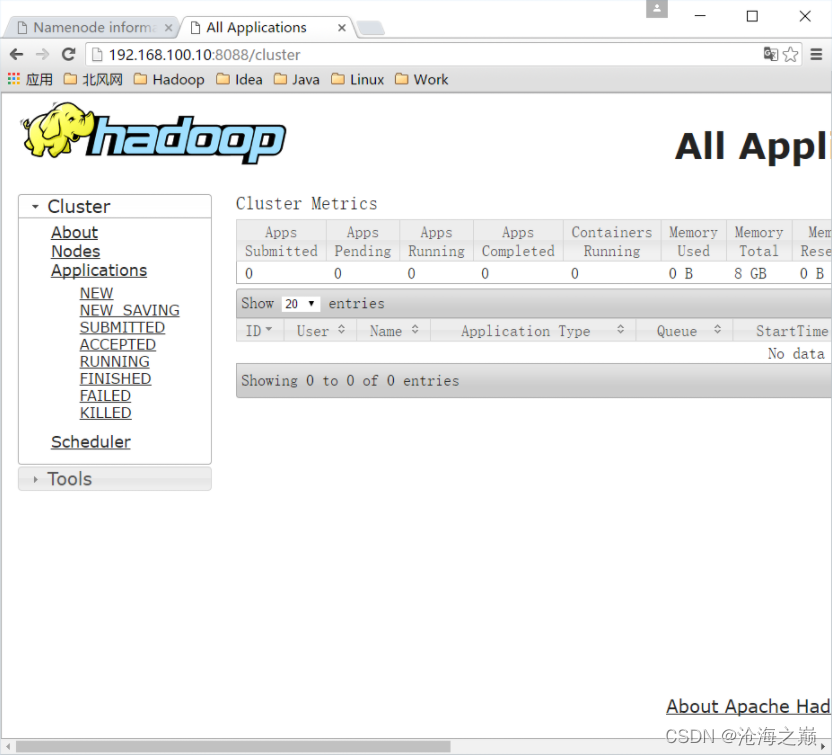

在本地/opt/data目录创建一个文件wc.input,内容如下。

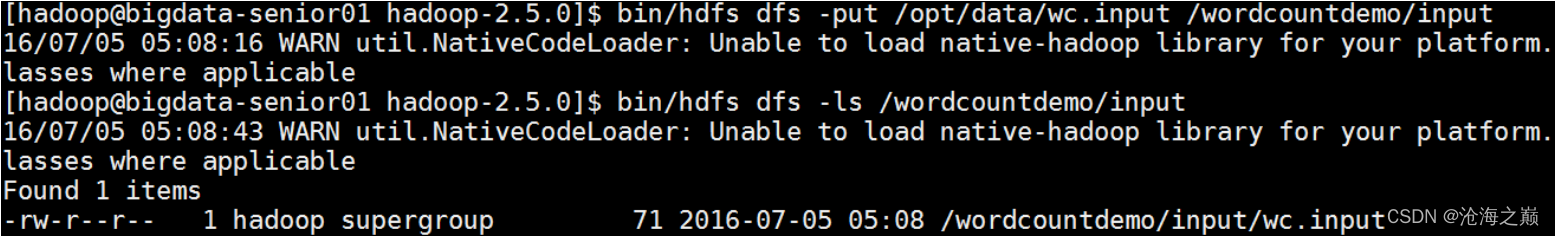

将wc.input文件上传到HDFS的/wordcountdemo/input目录中:

[hadoop@bigdata-senior01 hadoop-2.7.2]$ bin/hdfs dfs -put /opt/data/wc.input /wordcountdemo/input

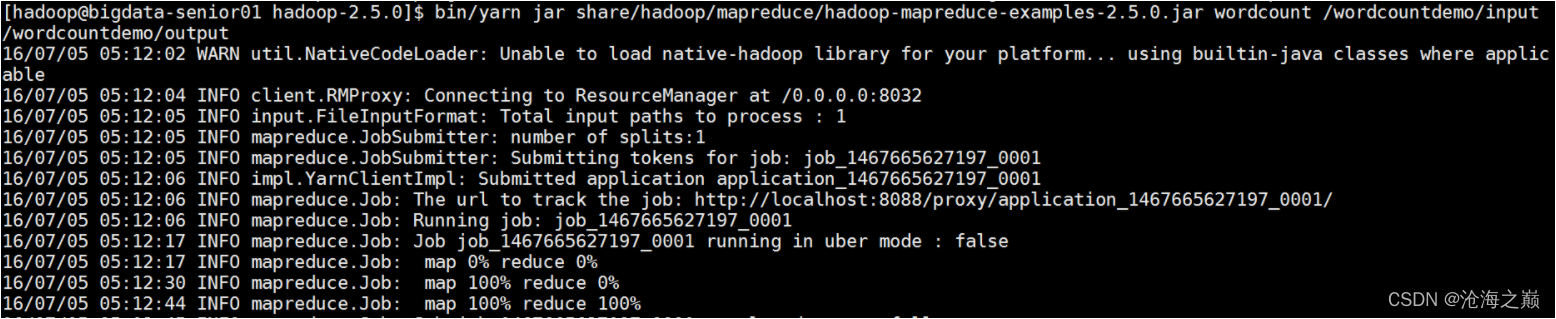

2、 运行WordCount MapReduce Job

[hadoop@bigdata-senior01 hadoop-2.7.2]$ bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar wordcount /wordcountdemo/input /wordcountdemo/output

[hadoop@localhost hadoop-2.7.2]$ bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar wordcount /wordcountdemo/input /wordcountdemo/output

22/01/04 22:20:05 INFO client.RMProxy: Connecting to ResourceManager at bigdata-senior01.chybinmy.com/192.168.100.20:8032

22/01/04 22:20:06 INFO input.FileInputFormat: Total input files to process : 1

22/01/04 22:20:07 INFO mapreduce.JobSubmitter: number of splits:1

22/01/04 22:20:07 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1641363392087_0001

22/01/04 22:20:08 INFO impl.YarnClientImpl: Submitted application application_1641363392087_0001

22/01/04 22:20:08 INFO mapreduce.Job: The url to track the job: http://bigdata-senior01.chybinmy.com:8088/proxy/application_1641363392087_0001/

22/01/04 22:20:08 INFO mapreduce.Job: Running job: job_1641363392087_0001

22/01/04 22:20:14 INFO mapreduce.Job: Job job_1641363392087_0001 running in uber mode : false

22/01/04 22:20:14 INFO mapreduce.Job: map 0% reduce 0%

22/01/04 22:20:19 INFO mapreduce.Job: map 100% reduce 0%

22/01/04 22:20:24 INFO mapreduce.Job: map 100% reduce 100%

22/01/04 22:20:25 INFO mapreduce.Job: Job job_1641363392087_0001 completed successfully

22/01/04 22:20:25 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=94

FILE: Number of bytes written=316115

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=206

HDFS: Number of bytes written=60

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=2534

Total time spent by all reduces in occupied slots (ms)=2356

Total time spent by all map tasks (ms)=2534

Total time spent by all reduce tasks (ms)=2356

Total vcore-milliseconds taken by all map tasks=2534

Total vcore-milliseconds taken by all reduce tasks=2356

Total megabyte-milliseconds taken by all map tasks=2594816

Total megabyte-milliseconds taken by all reduce tasks=2412544

Map-Reduce Framework

Map input records=4

Map output records=11

Map output bytes=115

Map output materialized bytes=94

Input split bytes=135

Combine input records=11

Combine output records=7

Reduce input groups=7

Reduce shuffle bytes=94

Reduce input records=7

Reduce output records=7

Spilled Records=14

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=121

CPU time spent (ms)=1100

Physical memory (bytes) snapshot=426364928

Virtual memory (bytes) snapshot=4207398912

Total committed heap usage (bytes)=298844160

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=71

File Output Format Counters

Bytes Written=60

[hadoop@localhost hadoop-2.7.2]$ bin/hdfs dfs -ls /wordcountdemo/output

Found 2 items

-rw-r--r-- 1 hadoop supergroup 0 2022-01-04 22:20 /wordcountdemo/output/_SUCCESS

-rw-r--r-- 1 hadoop supergroup 60 2022-01-04 22:20 /wordcountdemo/output/part-r-00000

[hadoop@localhost hadoop-2.7.2]$

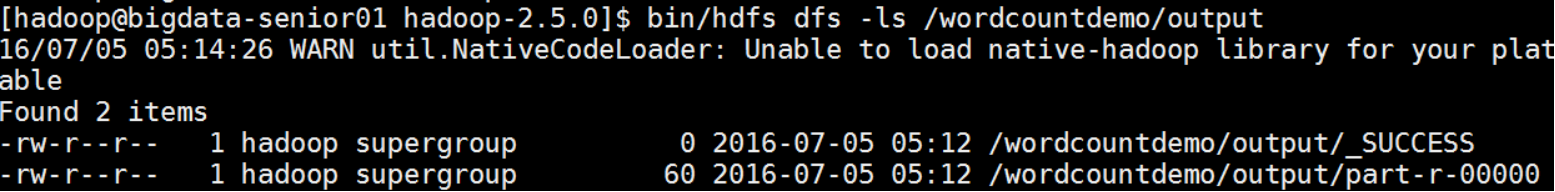

3、 查看输出结果目录

[hadoop@bigdata-senior01 hadoop-2.7.2]$ bin/hdfs dfs -ls /wordcountdemo/output

-rw-r--r-- 1 hadoop supergroup 0 2016-07-05 05:12 /wordcountdemo/output/_SUCCESS

-rw-r--r-- 1 hadoop supergroup 60 2016-07-05 05:12 /wordcountdemo/output/part-r-00000

output目录中有两个文件,_SUCCESS文件是空文件,有这个文件说明Job执行成功。

part-r-00000文件是结果文件,其中-r-说明这个文件是Reduce阶段产生的结果,mapreduce程序执行时,可以没有reduce阶段,但是肯定会有map阶段,如果没有reduce阶段这个地方有是-m-。

一个reduce会产生一个part-r-开头的文件。

查看输出文件内容。

[hadoop@bigdata-senior01 hadoop-2.7.2]$ bin/hdfs dfs -cat /wordcountdemo/output/part-r-00000

hadoop 3

hbase 1

hive 2

mapreduce 1

spark 2

sqoop 1

storm 1

结果是按照键值排好序的。

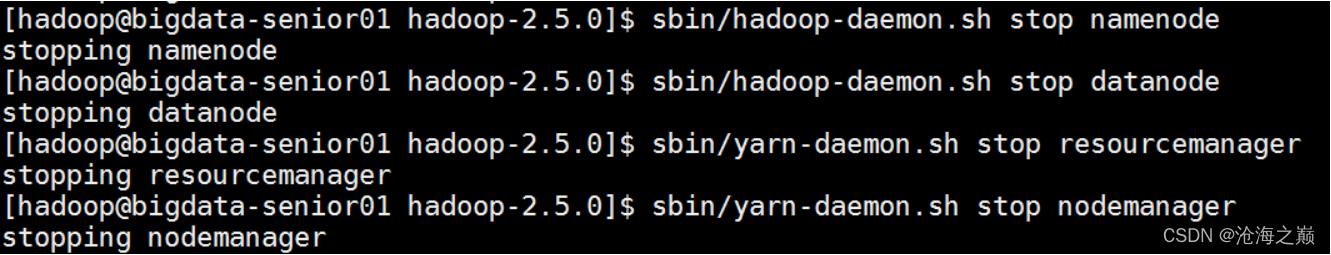

停止Hadoop

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/hadoop-daemon.sh stop namenode

stopping namenode

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/hadoop-daemon.sh stop datanode

stopping datanode

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/yarn-daemon.sh stop resourcemanager

stopping resourcemanager

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/yarn-daemon.sh stop nodemanager

stopping nodemanager

Hadoop各个功能模块的理解

1、 HDFS模块

HDFS负责大数据的存储,通过将大文件分块后进行分布式存储方式,突破了服务器硬盘大小的限制,解决了单台机器无法存储大文件的问题,HDFS是个相对独立的模块,可以为YARN提供服务,也可以为HBase等其他模块提供服务。

2、 YARN模块

YARN是一个通用的资源协同和任务调度框架,是为了解决Hadoop1.x中MapReduce里NameNode负载太大和其他问题而创建的一个框架。

YARN是个通用框架,不止可以运行MapReduce,还可以运行Spark、Storm等其他计算框架。

3、 MapReduce模块

MapReduce是一个计算框架,它给出了一种数据处理的方式,即通过Map阶段、Reduce阶段来分布式地流式处理数据。它只适用于大数据的离线处理,对实时性要求很高的应用不适用。

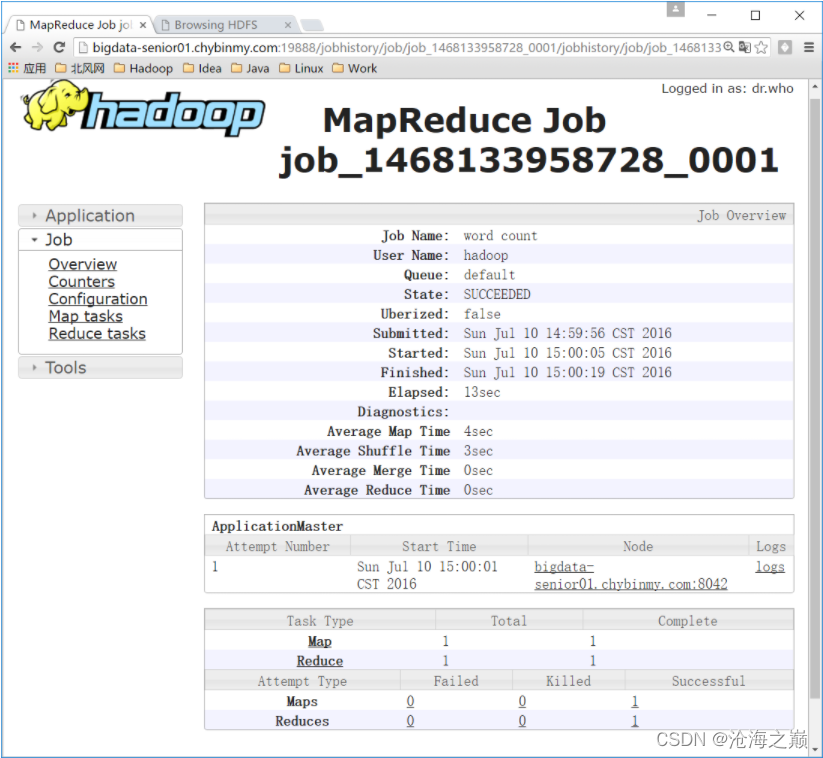

开启历史服务

历史服务介绍

Hadoop开启历史服务可以在web页面上查看Yarn上执行job情况的详细信息。可以通过历史服务器查看已经运行完的Mapreduce作业记录,比如用了多少个Map、用了多少个Reduce、作业提交时间、作业启动时间、作业完成时间等信息。

开启历史服务

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/mr-jobhistory-daemon.sh start historyserver

开启后,可以通过Web页面查看历史服务器:

http://bigdata-senior01.chybinmy.com:19888/

Web查看job执行历史

1、 运行一个mapreduce任务

[hadoop@bigdata-senior01 hadoop-2.7.2]$ bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar wordcount /wordcountdemo/input /wordcountdemo/output1

2、 job执行中

3、 查看job历史

历史服务器的Web端口默认是19888,可以查看Web界面。

但是在上面所显示的某一个Job任务页面的最下面,Map和Reduce个数的链接上,点击进入Map的详细信息页面,再查看某一个Map或者Reduce的详细日志是看不到的,是因为没有开启日志聚集服务。

开启日志聚集

4、 日志聚集介绍

MapReduce是在各个机器上运行的,在运行过程中产生的日志存在于各个机器上,为了能够统一查看各个机器的运行日志,将日志集中存放在HDFS上,这个过程就是日志聚集。

5、 开启日志聚集

配置日志聚集功能:

Hadoop默认是不启用日志聚集的。在yarn-site.xml文件里配置启用日志聚集。

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>106800</value>

</property>

yarn.log-aggregation-enable:是否启用日志聚集功能。

yarn.log-aggregation.retain-seconds:设置日志保留时间,单位是秒。

将配置文件分发到其他节点:

[hadoop@bigdata-senior01 hadoop]$ scp /opt/modules/hadoop-2.7.2/etc/hadoop/yarn-site.xml bigdata-senior02.chybinmy.com:/opt/modules/hadoop-2.7.2/etc/hadoop/

[hadoop@bigdata-senior01 hadoop]$ scp /opt/modules/hadoop-2.7.2/etc/hadoop/yarn-site.xml bigdata-senior03.chybinmy.com:/opt/modules/hadoop-2.7.2/etc/hadoop/

重启Yarn进程:

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/stop-yarn.sh

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/start-yarn.sh

重启HistoryServer进程:

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/mr-jobhistory-daemon.sh stop historyserver

[hadoop@bigdata-senior01 hadoop-2.7.2]$ sbin/mr-jobhistory-daemon.sh start historyserver

6、 测试日志聚集

运行一个demo MapReduce,使之产生日志:

bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar wordcount /input /output1

查看日志:

运行Job后,就可以在历史服务器Web页面查看各个Map和Reduce的日志了。

Hadoop(2.7.2,Hadoop其他版本需自行编译Linkis) ,安装的机器必须支持执行 hdfs dfs -ls / 命令

[hadoop@bigdata-senior01 ~]$ hdfs dfs -ls /

Found 3 items

drwxr-xr-x - hadoop supergroup 0 2022-01-04 22:14 /demo1

drwx------ - hadoop supergroup 0 2022-01-04 22:58 /tmp

drwxr-xr-x - hadoop supergroup 0 2022-01-04 22:22 /wordcountdemo

https://blog.csdn.net/weixin_33955681/article/details/92958527)

第十部分、Hive安装部署

安装部署Hive

wget http://archive.apache.org/dist/hive/hive-2.3.3/apache-hive-2.3.3-bin.tar.gz

tar -zxvf apache-hive-2.3.3-bin.tar.gz -C /opt/modules

//修改包名

$ mv apache-hive-2.3.3-bin apache-hive-2.3.3

==配置hive的环境变量(root用户)==

vi /etc/profile

export HIVE_HOME="/opt/modules/apache-hive-2.3.3"

export HIVE_CONF_DIR="/opt/modules/apache-hive-2.3.3/conf"

在 export PATH后面添加 H I V E H O M E / b i n : ‘ ‘ ‘ e x p o r t P A T H = HIVE_HOME/bin: ```export PATH= HIVEHOME/bin:‘‘‘exportPATH=HIVE_HOME/bin:$PATH```

————————————————

版权声明:本文为CSDN博主「数据的星辰大海」的原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接及本声明。

原文链接:https://blog.csdn.net/qq_37554565/article/details/90477492

配置hive-site.xml

- 使用hive-default.xml.template,创建hive-site.xml

//进入hive/conf文件夹下

$ cd apache-hive-2.3.3/conf/

//拷贝hive-default.xml.template ,重命名为 hive-site.xml

$ cp hive-default.xml.template hive-site.xml

————————————————

- 修改元数据数据库地址, javax.jdo.option.ConnectionURL;

//修改hive-site.xml配置

$ vi hive-site.xml

//1. 按 i 键,进入编辑状态

//2. 按 / 键,查找 javax.jdo.option.ConnectionURL

//修改如下

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?autoReconnect=true&useUnicode=true&createDatabaseIfNotExist=true&characterEncoding=utf8&useSSL=false&serverTimezone=UTC</value>

</property>

————————————————

3. 修改元数据数据库驱动,javax.jdo.option.ConnectionDriverName;

// 按 / 键,查找 javax.jdo.option.ConnectionDriverName

//修改如下

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

————————————————

4. 修改元数据数据库用户名,javax.jdo.option.ConnectionUserName;

//按 / 键,查找 javax.jdo.option.ConnectionUserName

//修改如下

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

————————————————

5. 元数据数据库登陆密码,javax.jdo.option.ConnectionPassword;

//按 / 键, 查找 javax.jdo.option.ConnectionPassword

//修改如下

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>abcd123</value>

</property>

————————————————

6. 修改hive数据仓库存储地址(在hdfs上具体存储地址),hive.metastore.warehouse.dir;

//按 / 键,查找 hive.metastore.warehouse.dir

//默认 /user/hive/warehouse ,这里不进行调整

//修改如下

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

————————————————

7. 配置其他路径;

//1. 配置 hive.querylog.location

//修改如下

<property>

<name>hive.querylog.location</name>

<value>/opt/tmp/hive</value>

</property>

//2. 配置 hive.server2.logging.operation.log.location

//修改如下

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/opt/tmp/hive/operation_logs</value>

</property>

//3. 配置 hive.exec.local.scratchdir????

//修改如下

<property>

<name>hive.exec.local.scratchdir</name>

<value>/opt/tmp/hive</value>

</property>

//4. 配置 hive.downloaded.resources.dir

//修改如下

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/tmp/_resources</value>

</property>

————————————————

配置hive-log4j2.properties错误日志

拷贝 hive-log4j2.properties.template ,并命名为 hive-log4j2.properties

//按 esc 键,退出编辑;

//按 wq 键,保存编辑;

//查看 /conf

$ ll

//拷贝 hive-log4j2.properties.template ,并命名为 hive-log4j2.properties

$ cp hive-log4j2.properties.template hive-log4j2.properties

//编辑 hive-log4j2.properties

$ vi hive-log4j2.properties

//按 i 键,进入编辑状态

//配置输出log文件

property.hive.log.dir = /opt/tmp/hive/operation_logs

————————————————

修改hive-log4j2.properties,配置hive的log

配置下面的参数(如果没有logs目录,在hive根目录下创建它

修改hive-env.sh

cp hive-env.sh.template hive-env.sh

因为Hive使用了 Hadoop, 需要在 hive-env.sh 文件中指定 Hadoop 安装路径:

vim hive-env.sh

在打开的配置文件中,添加如下几行:

export JAVA_HOME=/opt/modules/jdk1.8.0_261/

export HADOOP_HOME=/opt/modules/hadoop-2.7.2/

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HIVE_HOME=/opt/modules/apache-hive-2.3.3

export HIVE_CONF_DIR=$HIVE_HOME/conf

export HIVE_AUX_JARS_PATH=$HIVE_HOME/lib

初始化hive元数据

- 初始化schema,schematool -dbType mysql -initSchema;

//使用 schematool, 初始化 schema

[hadoop@bigdata-senior01 apache-hive-2.3.3]$ pwd

/opt/modules/apache-hive-2.3.3

[hadoop@bigdata-senior01 modules]$ cp mysql-connector-java-5.1.49.jar apache-hive-2.3.3/lib/

[hadoop@bigdata-senior01 modules]$ ll apache-hive-2.3.3/lib/mysql*

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 22:53 apache-hive-2.3.3/lib/mysql-connector-java-5.1.49.jar

-rw-r--r-- 1 hadoop hadoop 7954 Dec 19 2016 apache-hive-2.3.3/lib/mysql-metadata-storage-0.9.2.jar

[hadoop@bigdata-senior01 modules]$

[hadoop@bigdata-senior01 apache-hive-2.3.3]$ bin/schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-2.3.3/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.8.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://localhost:3306/hive?autoReconnect=true&useUnicode=true&createDatabaseIfNotExist=true&characterEncoding=utf8&useSSL=false&serverTimezone=UTC

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 2.3.0

Initialization script hive-schema-2.3.0.mysql.sql

Initialization script completed

schemaTool completed

[hadoop@bigdata-senior01 apache-hive-2.3.3]$

[hadoop@hadoop000 apache-hive-2.3.3]$

处理Driver异常

-

- 补充初始化 schema 时,会出现Underlying cause: java.lang.ClassNotFoundException: com.mysql.jdbc.Driver异常,这里是因为缺少mysql连接驱动,这里使用 mysql-connector-java 5.1.38;

//下载mysql-connector的jar包

cd /opt/modules/apache-hive-2.3.3/lib

wget

[hadoop@hadoop000 lib]$ wget http://central.maven.org/maven2/mysql/mysql-connector-java/5.1.38/mysql-connector-java-5.1.38.

//检查mysql驱动是否下载完成

[hadoop@localhost lib]$ ll | grep mysql

-rw-rw-r--. 1 hadoop hadoop 983911 Dec 16 2015 mysql-connector-java-5.1.38.jar

-rw-r--r--. 1 hadoop hadoop 7954 Dec 20 2016 mysql-metadata-storage-0.9.2.jar

再次执行初始化,出现 schemaTool completed ,说明初始化成功;

————————————————

3. 登录mysql,查看初始化后的hive库

//登录mysql

# mysql -uroot -pabcd123

//查看数据库

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| hive |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

//使用 hive

mysql> use hive;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

//查看hive下,所有表,发现是 57 张,说明初始化成功

mysql> show tables;

+---------------------------+

| Tables_in_hive |

+---------------------------+

| AUX_TABLE |

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| COMPACTION_QUEUE |

| COMPLETED_COMPACTIONS |

| COMPLETED_TXN_COMPONENTS |

| DATABASE_PARAMS |

| DBS |

| DB_PRIVS |

| DELEGATION_TOKENS |

| FUNCS |

| FUNC_RU |

| GLOBAL_PRIVS |

| HIVE_LOCKS |

| IDXS |

| INDEX_PARAMS |

| KEY_CONSTRAINTS |

| MASTER_KEYS |

| NEXT_COMPACTION_QUEUE_ID |

| NEXT_LOCK_ID |

| NEXT_TXN_ID |

| NOTIFICATION_LOG |

| NOTIFICATION_SEQUENCE |

| NUCLEUS_TABLES |

| PARTITIONS |

| PARTITION_EVENTS |

| PARTITION_KEYS |

| PARTITION_KEY_VALS |

| PARTITION_PARAMS |

| PART_COL_PRIVS |

| PART_COL_STATS |

| PART_PRIVS |

| ROLES |

| ROLE_MAP |

| SDS |

| SD_PARAMS |

| SEQUENCE_TABLE |

| SERDES |

| SERDE_PARAMS |

| SKEWED_COL_NAMES |

| SKEWED_COL_VALUE_LOC_MAP |

| SKEWED_STRING_LIST |

| SKEWED_STRING_LIST_VALUES |

| SKEWED_VALUES |

| SORT_COLS |

| TABLE_PARAMS |

| TAB_COL_STATS |

| TBLS |

| TBL_COL_PRIVS |

| TBL_PRIVS |

| TXNS |

| TXN_COMPONENTS |

| TYPES |

| TYPE_FIELDS |

| VERSION |

| WRITE_SET |

+---------------------------+

57 rows in set (0.00 sec)

————————————————

启动hive

- 使用hiveserver2启动hive,启动前需要修改hadoop代理访问权限:

找到hadoop的core-site.xml,添加如下配置:

[hadoop@bigdata-senior01 hadoop]$ pwd

/opt/modules/hadoop-2.8.5/etc/hadoop

[hadoop@bigdata-senior01 hadoop]$ vim core-site.xml

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

————————————————

2. hive的访问方式分为两种:

1). beeline,该方式仅支持本地机器进行操作;

启动方式如下:$ bin/beeline -u jdbc:hive2://127.0.0.1:10000 -n hadoop

-n : 代理用户

-u : 请求地址

2). hiveserver2,该方式可提供不同的机器进行调用;

启动方式如下:$ bin/hiveservice2

查看是否已开放端口:netstat -ant | grep 10000

[hadoop@bigdata-senior01 apache-hive-2.3.3]$ pwd

/opt/modules/apache-hive-2.3.3

[hadoop@bigdata-senior01 apache-hive-2.3.3]$ bin/hiveserver2

which: no hbase in (/opt/modules/hadoop-2.8.5/bin:/opt/modules/hadoop-2.8.5/sbin:/opt/modules/jdk1.8.0_261/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/mysql/bin:/home/hadoop/.local/bin:/home/hadoop/bin:/opt/modules/jdk1.8.0_261//bin:/opt/modules/hadoop-2.8.5//bin:/opt/modules/hadoop-2.8.5//sbin)

2022-01-04 22:58:41: Starting HiveServer2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-2.3.3/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.8.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

————————————————

Hive(2.3.3,Hive其他版本需自行编译Linkis),安装的机器必须支持执行hive -e "show databases"命令

[hadoop@bigdata-senior01 apache-hive-2.3.3]$ hive -e "show databases"

which: no hbase in (/opt/modules/apache-hive-2.3.3/bin:/opt/modules/hadoop-2.8.5/bin:/opt/modules/hadoop-2.8.5/sbin:/opt/modules/jdk1.8.0_261/bin:/opt/modules/hadoop-2.8.5/bin:/opt/modules/hadoop-2.8.5/sbin:/opt/modules/jdk1.8.0_261/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/mysql/bin:/home/hadoop/.local/bin:/home/hadoop/bin:/opt/modules/jdk1.8.0_261//bin:/opt/modules/hadoop-2.8.5//bin:/opt/modules/hadoop-2.8.5//sbin:/opt/mysql/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-2.3.3/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.8.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/opt/modules/apache-hive-2.3.3/conf/hive-log4j2.properties Async: true

参考

集群搭建–安装apache-hive-2.3.4

装Apache Hive-2.3.3

https://blog.csdn.net/weixin_33955681/article/details/92958527)

文章目录

- DSS部署流程

-

- 第一部分、 背景

- 第二部分、准备虚拟机、环境初始化

- 第三部分、创建hadoop用户

- 第四部分、配置JDK

- 第五部分 Scala部署

- 第六部分、安装MySQL5.7.25

- 第七部分、安装python3

- 第八部分、nginx【dss会自动安装】

- 第九部分、安装hadoop(伪分布式)

- 第十部分、Hive安装部署

- 第十一部分、Spark on Yarn部署

- 第十二部分、DSS一键安装

- 第十三部分、帮助

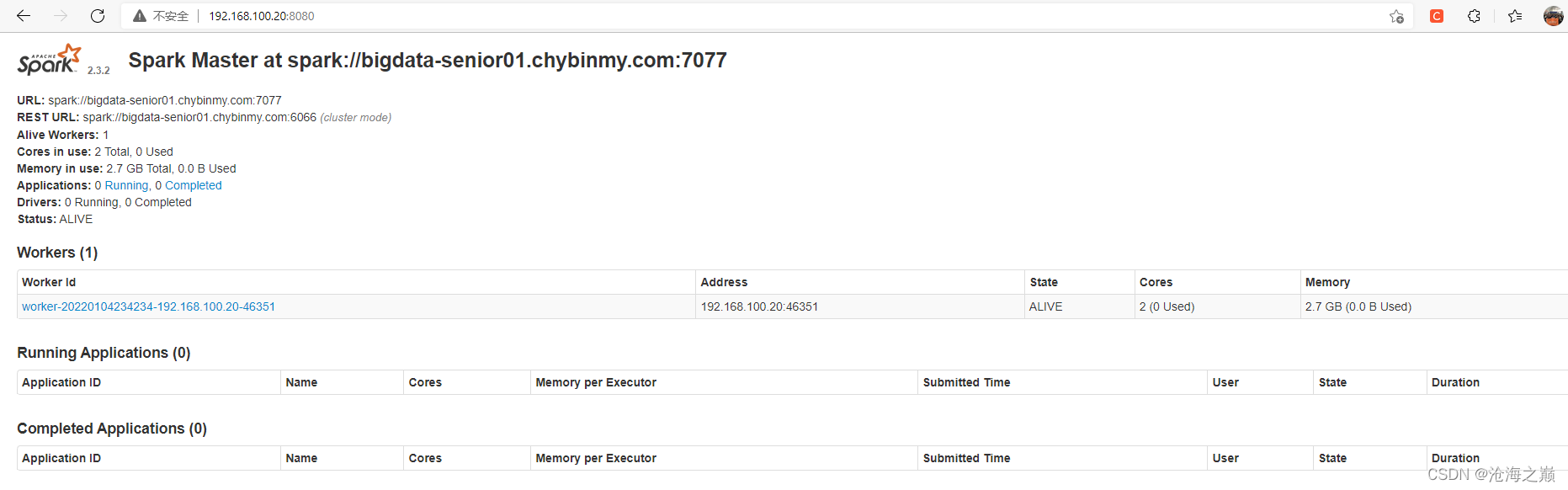

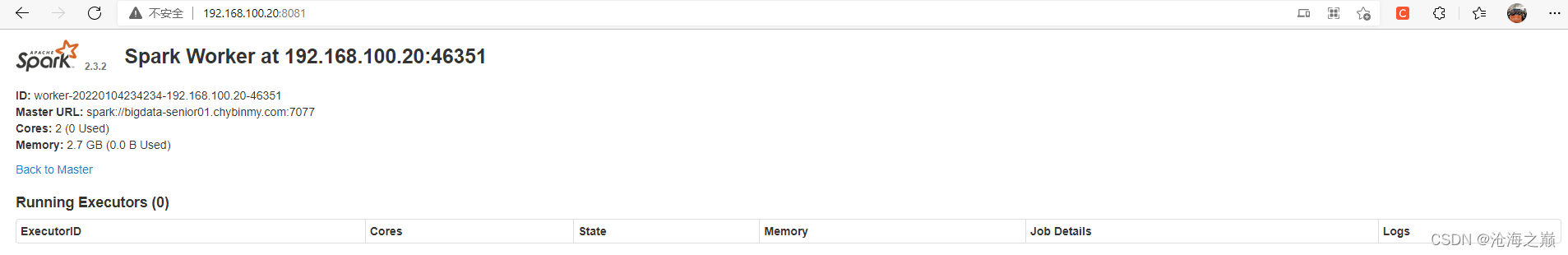

第十一部分、Spark on Yarn部署

相关配置

tar xf spark-2.3.2-bin-hadoop2.7.tgz

cd /opt/modules/spark-2.3.2-bin-hadoop2.7/conf

更改配置文件

cp spark-env.sh.template spark-env.sh

cp slaves.template slaves

vi slaves

加入localhost

vi spark-env.sh

export JAVA_HOME=/opt/modules/jdk1.8.0_261

export SPARK_HOME=/opt/modules/spark-2.3.2-bin-hadoop2.7

#Spark主节点的IP

export SPARK_MASTER_IP=hadoop

#Spark主节点的端口号

export SPARK_MASTER_PORT=7077

启动:

cd /opt/modules/spark-2.3.2-bin-hadoop2.7/sbin

./start-all.sh

#vim /etc/profile

#添加spark的环境变量,加如PATH下、export出来

#source /etc/profile

export SPARK_HOME="/opt/modules/spark-2.3.2-bin-hadoop2.7"

export SPARK_MASTER_IP=master

export SPARK_EXECUTOR_MEMORY=1G

export PATH=$SPARK_HOME/bin:$PATH

操作记录如下

[hadoop@bigdata-senior01 ~]$ cd /opt/modules/

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ pwd

/opt/modules

[hadoop@bigdata-senior01 modules]$ tar xf scala-2.12.7.tgz

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

drwxrwxr-x 6 hadoop hadoop 50 Sep 27 2018 scala-2.12.7

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$

[hadoop@bigdata-senior01 modules]$ cd scala-2.12.7/

[hadoop@bigdata-senior01 scala-2.12.7]$ ll

total 0

drwxrwxr-x 2 hadoop hadoop 162 Sep 27 2018 bin

drwxrwxr-x 4 hadoop hadoop 86 Sep 27 2018 doc

drwxrwxr-x 2 hadoop hadoop 244 Sep 27 2018 lib

drwxrwxr-x 3 hadoop hadoop 18 Sep 27 2018 man

[hadoop@bigdata-senior01 scala-2.12.7]$ pwd

/opt/modules/scala-2.12.7

[hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$ vim /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ sudo vim /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ source /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ echo $SCALA_HOME

/opt/modules/scala-2.12.7

[hadoop@bigdata-senior01 scala-2.12.7]$ scala

Welcome to Scala 2.12.7 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_261).

Type in expressions for evaluation. Or try :help.

scala>

scala> [hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$ cd ../

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

drwxrwxr-x 6 hadoop hadoop 50 Sep 27 2018 scala-2.12.7

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ tar xf spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

drwxrwxr-x 6 hadoop hadoop 50 Sep 27 2018 scala-2.12.7

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

drwxrwxr-x 13 hadoop hadoop 211 Sep 16 2018 spark-2.3.2-bin-hadoop2.7

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ cd spark-2.3.2-bin-hadoop2.7/

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ pwd

/opt/modules/spark-2.3.2-bin-hadoop2.7

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ cd /opt/modules/spark-2.3.2-bin-hadoop2.7/conf

[hadoop@bigdata-senior01 conf]$ cp spark-env.sh.template spark-env.sh

[hadoop@bigdata-senior01 conf]$ cp slaves.template slaves

[hadoop@bigdata-senior01 conf]$ vim slaves

[hadoop@bigdata-senior01 conf]$ vi spark-env.sh

[hadoop@bigdata-senior01 conf]$ cd ../

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ ll

total 84

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 bin

drwxrwxr-x 2 hadoop hadoop 264 Jan 4 23:33 conf

drwxrwxr-x 5 hadoop hadoop 50 Sep 16 2018 data

drwxrwxr-x 4 hadoop hadoop 29 Sep 16 2018 examples

drwxrwxr-x 2 hadoop hadoop 12288 Sep 16 2018 jars

drwxrwxr-x 3 hadoop hadoop 25 Sep 16 2018 kubernetes

-rw-rw-r-- 1 hadoop hadoop 18045 Sep 16 2018 LICENSE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 licenses

-rw-rw-r-- 1 hadoop hadoop 26366 Sep 16 2018 NOTICE

drwxrwxr-x 8 hadoop hadoop 240 Sep 16 2018 python

drwxrwxr-x 3 hadoop hadoop 17 Sep 16 2018 R

-rw-rw-r-- 1 hadoop hadoop 3809 Sep 16 2018 README.md

-rw-rw-r-- 1 hadoop hadoop 164 Sep 16 2018 RELEASE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 sbin

drwxrwxr-x 2 hadoop hadoop 42 Sep 16 2018 yarn

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ cd conf

[hadoop@bigdata-senior01 conf]$ echo ${JAVA_HOME}

/opt/modules/jdk1.8.0_261

[hadoop@bigdata-senior01 conf]$

[hadoop@bigdata-senior01 conf]$ pwd

/opt/modules/spark-2.3.2-bin-hadoop2.7/conf

[hadoop@bigdata-senior01 conf]$

[hadoop@bigdata-senior01 conf]$ vim spark-env.sh

[hadoop@bigdata-senior01 conf]$ cd ../

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ ll

total 84

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 bin

drwxrwxr-x 2 hadoop hadoop 264 Jan 4 23:35 conf

drwxrwxr-x 5 hadoop hadoop 50 Sep 16 2018 data

drwxrwxr-x 4 hadoop hadoop 29 Sep 16 2018 examples

drwxrwxr-x 2 hadoop hadoop 12288 Sep 16 2018 jars

drwxrwxr-x 3 hadoop hadoop 25 Sep 16 2018 kubernetes

-rw-rw-r-- 1 hadoop hadoop 18045 Sep 16 2018 LICENSE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 licenses

-rw-rw-r-- 1 hadoop hadoop 26366 Sep 16 2018 NOTICE

drwxrwxr-x 8 hadoop hadoop 240 Sep 16 2018 python

drwxrwxr-x 3 hadoop hadoop 17 Sep 16 2018 R

-rw-rw-r-- 1 hadoop hadoop 3809 Sep 16 2018 README.md

-rw-rw-r-- 1 hadoop hadoop 164 Sep 16 2018 RELEASE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 sbin

drwxrwxr-x 2 hadoop hadoop 42 Sep 16 2018 yarn

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ cd sbin

[hadoop@bigdata-senior01 sbin]$ ./start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/modules/spark-2.3.2-bin-hadoop2.7/logs/spark-hadoop- org.apache.spark.deploy.master.Master-1-bigdata-senior01.chybinmy.com.out

localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

hadoop@localhost's password:

hadoop@localhost's password: localhost: Permission denied, please try again.

hadoop@localhost's password: localhost: Permission denied, please try again.

localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$ jps

18898 NodeManager

96757 Jps

17144 DataNode

18600 ResourceManager

16939 NameNode

21277 JobHistoryServer

17342 SecondaryNameNode

95983 Master

[hadoop@bigdata-senior01 sbin]$ vim /etc/profile

[hadoop@bigdata-senior01 sbin]$ sudo vim /etc/profile

[hadoop@bigdata-senior01 sbin]$ source /etc/profile

[hadoop@bigdata-senior01 sbin]$ ./start-all.sh

org.apache.spark.deploy.master.Master running as process 95983. Stop it first.

hadoop@localhost's password:

localhost: starting org.apache.spark.deploy.worker.Worker, logging to /opt/modules/spark-2.3.2-bin-hadoop2.7/logs/sp ark-hadoop-org.apache.spark.deploy.worker.Worker-1-bigdata-senior01.chybinmy.com.out

[hadoop@bigdata-senior01 sbin]$ cat /opt/modules/spark-2.3.2-bin-hadoop2.7/logs/spark-hadoop-org.apache.spark.deploy .worker.Worker-1-bigdata-senior01.chybinmy.com.out

Spark Command: /opt/modules/jdk1.8.0_261/bin/java -cp /opt/modules/spark-2.3.2-bin-hadoop2.7/conf/:/opt/modules/spar k-2.3.2-bin-hadoop2.7/jars/* -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://bigdata-senior01 .chybinmy.com:7077

========================================

2022-01-04 23:42:32 INFO Worker:2612 - Started daemon with process name: [email protected]

2022-01-04 23:42:32 INFO SignalUtils:54 - Registered signal handler for TERM

2022-01-04 23:42:32 INFO SignalUtils:54 - Registered signal handler for HUP

2022-01-04 23:42:32 INFO SignalUtils:54 - Registered signal handler for INT

2022-01-04 23:42:33 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using buil tin-java classes where applicable

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing view acls to: hadoop

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing modify acls to: hadoop

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing view acls groups to:

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing modify acls groups to:

2022-01-04 23:42:33 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users wi th view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

2022-01-04 23:42:34 INFO Utils:54 - Successfully started service 'sparkWorker' on port 46351.

2022-01-04 23:42:34 INFO Worker:54 - Starting Spark worker 192.168.100.20:46351 with 2 cores, 2.7 GB RAM

2022-01-04 23:42:34 INFO Worker:54 - Running Spark version 2.3.2

2022-01-04 23:42:34 INFO Worker:54 - Spark home: /opt/modules/spark-2.3.2-bin-hadoop2.7

2022-01-04 23:42:35 INFO log:192 - Logging initialized @4458ms

2022-01-04 23:42:35 INFO Server:351 - jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

2022-01-04 23:42:35 INFO Server:419 - Started @4642ms

2022-01-04 23:42:35 INFO AbstractConnector:278 - Started ServerConnector@6de6ec17{HTTP/1.1,[http/1.1]}{0.0.0.0:8081 }

2022-01-04 23:42:35 INFO Utils:54 - Successfully started service 'WorkerUI' on port 8081.

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6cd8d218{/logPage,null,AVAILABL E,@Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@265b9144{/logPage/json,null,AVA ILABLE,@Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@460a8f36{/,null,AVAILABLE,@Spar k}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@732d2fc5{/json,null,AVAILABLE,@ Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@9864155{/static,null,AVAILABLE, @Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@a5fd0ba{/log,null,AVAILABLE,@Sp ark}

2022-01-04 23:42:35 INFO WorkerWebUI:54 - Bound WorkerWebUI to 0.0.0.0, and started at http://bigdata-senior01.chyb inmy.com:8081

2022-01-04 23:42:35 INFO Worker:54 - Connecting to master bigdata-senior01.chybinmy.com:7077...

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1c2b0b0e{/metrics/json,null,AVA ILABLE,@Spark}

2022-01-04 23:42:35 INFO TransportClientFactory:267 - Successfully created connection to bigdata-senior01.chybinmy. com/192.168.100.20:7077 after 156 ms (0 ms spent in bootstraps)

2022-01-04 23:42:36 INFO Worker:54 - Successfully registered with master spark://bigdata-senior01.chybinmy.com:7077

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$

访问地址:

http://192.168.100.20:8080/

http://192.168.100.20:8081/

Spark(支持2.0以上所有版本) ,安装的机器必须支持执行spark-sql -e “show databases” 命令

$

[hadoop@bigdata-senior01 sbin]$ spark-sql -e "show databases"

2022-01-04 23:49:13 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2022-01-04 23:49:14 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2022-01-04 23:49:14 INFO ObjectStore:289 - ObjectStore, initialize called

2022-01-04 23:49:15 INFO Persistence:77 - Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

2022-01-04 23:49:15 INFO Persistence:77 - Property datanucleus.cache.level2 unknown - will be ignored

2022-01-04 23:49:18 INFO ObjectStore:370 - Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2022-01-04 23:49:20 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:20 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:21 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:21 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:21 INFO MetaStoreDirectSql:139 - Using direct SQL, underlying DB is DERBY

2022-01-04 23:49:21 INFO ObjectStore:272 - Initialized ObjectStore

2022-01-04 23:49:21 WARN ObjectStore:6666 - Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

2022-01-04 23:49:21 WARN ObjectStore:568 - Failed to get database default, returning NoSuchObjectException

2022-01-04 23:49:22 INFO HiveMetaStore:663 - Added admin role in metastore

2022-01-04 23:49:22 INFO HiveMetaStore:672 - Added public role in metastore

2022-01-04 23:49:22 INFO HiveMetaStore:712 - No user is added in admin role, since config is empty

2022-01-04 23:49:22 INFO HiveMetaStore:746 - 0: get_all_databases

2022-01-04 23:49:22 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_all_databases

2022-01-04 23:49:22 INFO HiveMetaStore:746 - 0: get_functions: db=default pat=*

2022-01-04 23:49:22 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_functions: db=default pat=*

2022-01-04 23:49:22 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:23 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop

2022-01-04 23:49:23 INFO SessionState:641 - Created local directory: /tmp/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f_resources

2022-01-04 23:49:23 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f

2022-01-04 23:49:23 INFO SessionState:641 - Created local directory: /tmp/hadoop/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f

2022-01-04 23:49:23 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f/_tmp_space.db

2022-01-04 23:49:23 INFO SparkContext:54 - Running Spark version 2.3.2

2022-01-04 23:49:23 INFO SparkContext:54 - Submitted application: SparkSQL::192.168.100.20

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing view acls to: hadoop

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing modify acls to: hadoop

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing view acls groups to:

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing modify acls groups to:

2022-01-04 23:49:23 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

2022-01-04 23:49:24 INFO Utils:54 - Successfully started service 'sparkDriver' on port 37135.

2022-01-04 23:49:24 INFO SparkEnv:54 - Registering MapOutputTracker

2022-01-04 23:49:24 INFO SparkEnv:54 - Registering BlockManagerMaster

2022-01-04 23:49:24 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2022-01-04 23:49:24 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2022-01-04 23:49:24 INFO DiskBlockManager:54 - Created local directory at /tmp/blockmgr-e68cfdc9-adda-400b-beac-f4441481481c

2022-01-04 23:49:24 INFO MemoryStore:54 - MemoryStore started with capacity 366.3 MB

2022-01-04 23:49:24 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2022-01-04 23:49:24 INFO log:192 - Logging initialized @14831ms

2022-01-04 23:49:25 INFO Server:351 - jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

2022-01-04 23:49:25 INFO Server:419 - Started @15163ms

2022-01-04 23:49:25 INFO AbstractConnector:278 - Started ServerConnector@7d0cd23c{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2022-01-04 23:49:25 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@130cfc47{/jobs,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4584304{/jobs/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@51888019{/jobs/job,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@19b5214b{/jobs/job/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5fb3111a{/stages,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4aaecabd{/stages/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@23bd0c81{/stages/stage,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6889f56f{/stages/stage/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@231b35fb{/stages/pool,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@26da1ba2{/stages/pool/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3820cfe{/storage,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2407a36c{/storage/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5ec9eefa{/storage/rdd,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@28b8f98a{/storage/rdd/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3b4ef59f{/environment,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22cb3d59{/environment/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@33e4b9c4{/executors,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5cff729b{/executors/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@10d18696{/executors/threadDump,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6b8b5020{/executors/threadDump/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7d37ee0c{/static,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@67f946c3{/,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@21b51e59{/api,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4f664bee{/jobs/job/kill,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@76563ae7{/stages/stage/kill,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO SparkUI:54 - Bound SparkUI to 0.0.0.0, and started at http://bigdata-senior01.chybinmy.com:4040

2022-01-04 23:49:25 INFO Executor:54 - Starting executor ID driver on host localhost

2022-01-04 23:49:25 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 43887.

2022-01-04 23:49:25 INFO NettyBlockTransferService:54 - Server created on bigdata-senior01.chybinmy.com:43887

2022-01-04 23:49:25 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2022-01-04 23:49:25 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:25 INFO BlockManagerMasterEndpoint:54 - Registering block manager bigdata-senior01.chybinmy.com:43887 with 366.3 MB RAM, BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:25 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:25 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@35ac9ebd{/metrics/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO SharedState:54 - Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse').

2022-01-04 23:49:26 INFO SharedState:54 - Warehouse path is 'file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse'.

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@120350eb{/SQL,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2ccc9681{/SQL/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@aa752bb{/SQL/execution,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@77fc19cf{/SQL/execution/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2e45a357{/static/sql,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO HiveUtils:54 - Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

2022-01-04 23:49:26 INFO HiveClientImpl:54 - Warehouse location for Hive client (version 1.2.2) is file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse

2022-01-04 23:49:26 INFO metastore:291 - Mestastore configuration hive.metastore.warehouse.dir changed from /user/hive/warehouse to file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse

2022-01-04 23:49:26 INFO HiveMetaStore:746 - 0: Shutting down the object store...